23 Jan 2023

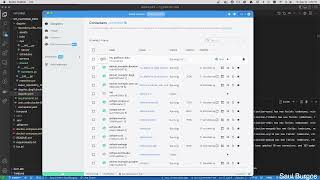

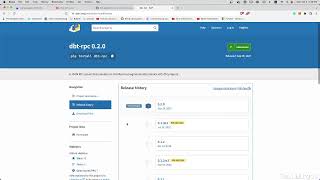

In this video, Ben Pankow, Software Engineer at Elementl, will show you how to streamline your process using Dagster to manage your Airbyte connections and orchestrate syncs with downstream computation using dbt. He'll take you step by step through setting up Airbyte with Dagster from scratch, so even if you're new to these tools, you'll be able to follow along. And for those who are more experienced, we'll also show you how Dagster and Airbyte can elevate your project to new heights. Don't miss out on this game-changing demo.

Subscribe to our newsletter: https://airbyte.com/newsletter?utm_source=youtube

Learn more about Airbyte: https://airbyte.com

#dataorchestration #data #communitycall

- 3 participants

- 21 minutes

23 Jan 2023

A productionalized notebook integrated with an orchestration platform provides an excellent balance of reproducibility, flexibility, and intent in a way that will be quickly consumable. This tutorial is valuable to data scientists and data engineers. This setup makes it easy to take notebooks from exploratory to production, and even easier to debug and ensure quality over time. This tutorial will show how you can achieve:

Time saving in initiating jobs: Allowing users to seamlessly transition an exploratory workflow created within a Noteable notebook into a productionalized, scheduled workflow in Dagster.

Time and cost saving for debugging failed runs: Allowing users to immediately dive into a live running notebook at the point of failure, with all of the in-memory state preserved. This saves users time and saves companies compute costs, because debugging does not require re-executing previous steps of the workflow.

Bios:

Pierre Brunelle

Pierre Brunelle is the CEO and Co-Founder of Noteable, a collaborative data notebook that enables data-driven teams to use and visualize data, together. Prior to Noteable, Pierre led Amazon’s notebook initiatives both for internal use as well as for SageMaker. He also worked on many open source initiatives including a standard for Data Quality work and an open source collaboration between Amazon and UC Berkeley to advance AI and machine learning. Pierre helped launch the first Amazon online car leasing store in Europe. At Amazon Pierre also launched a Price Elasticity Service and pushed investments in Probabilistic Programming Frameworks. And Pierre represented Amazon on many occasions to teach Machine Learning or at conferences such as NeurIPS. Pierre also writes about Time in Organization Studies. Pierre holds an MS in Building Engineering from ESTP Paris and an MRes in Decision Sciences and Risk Management from Arts et Métiers ParisTech.

Jamie DeMaria

Jamie is a software engineer working on Dagster. She has also built data analysis tools (using Dagster!) for a robotics startup and developed software to train mission planners for the Mars Curiosity rover.

===

www.pydata.org

PyData is an educational program of NumFOCUS, a 501(c)(3) non-profit organization in the United States. PyData provides a forum for the international community of users and developers of data analysis tools to share ideas and learn from each other. The global PyData network promotes discussion of best practices, new approaches, and emerging technologies for data management, processing, analytics, and visualization. PyData communities approach data science using many languages, including (but not limited to) Python, Julia, and R.

PyData conferences aim to be accessible and community-driven, with novice to advanced level presentations. PyData tutorials and talks bring attendees the latest project features along with cutting-edge use cases.

00:00 Welcome!

00:10 Help us add time stamps or captions to this video! See the description for details.

Want to help add timestamps to our YouTube videos to help with discoverability? Find out more here: https://github.com/numfocus/YouTubeVideoTimestamps

- 5 participants

- 1:25 hours

8 Dec 2022

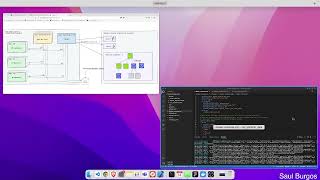

This is an ML pipeline with both CI & CD components*.

The story today is about CD - Continuous Deployment**. I’m assuming as the Data Scientist I don’t have access to the production environment (no “one-click deploy to prod” for me)***.

I develop my model (new feature branch) locally, using our dev database and local compute. I track experiments with MLflow and orchestrate my code with Dagster.

Once satisfied with my new model, I do the following to deploy:

- I check my code into a feature-branch in source control

- Open a pull request to the dev branch of our code base

- Kick back and watch

This starts a CI job via GitHub Actions that:

- Builds my project

- Tests my code

- Deploys to dev environment (teeny little deployment :)

If successful, another team member will merge my code into the dev branch.

Upon the merge into dev, this automatically triggers (dare I say, continuously) a deployment job:

- Deploys my code to Staging

- In my case, this job re-runs my ML pipeline and tests on staging data

- The benefit here is that typically staging data is closer to production data than whatever I was using in dev

If successful, this job initiates a manual review process for deployment to prod:

- Prompts an admin to review my code and choose whether to run the final job in the workflow: deploying to production.

- As the admin, I approve the deployment and the CD job finishes by training and deploying the model in prod.

Lots of hand-waving in this example, but I hope it helps show the git-based workflow moving between environments and the larger theme of the significant work required to actually deploy an ML project.
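To make the gates in that workflow concrete, here is a minimal Python sketch of the promotion logic. It is only an illustration under stated assumptions: the `promote` function, its parameters, and the environment names are hypothetical, and it models the order of the gates rather than any real GitHub Actions, Dagster, or MLflow configuration.

```python
from typing import Callable, List, Sequence

# A step is any zero-argument callable that returns True on success.
Step = Callable[[], bool]


def promote(
    ci_steps: Sequence[Step],
    staging_steps: Sequence[Step],
    merged_by_teammate: bool,
    approved_by_admin: bool,
) -> List[str]:
    """Return the environments a change reaches, in order (hypothetical model)."""
    reached: List[str] = []

    # Pull request opened: the CI job builds, tests, and deploys to dev.
    # all() stops at the first failing step, like a failed CI job.
    if not all(step() for step in ci_steps):
        return reached
    reached.append("dev")

    # Nothing moves forward until a teammate merges into the dev branch.
    if not merged_by_teammate:
        return reached

    # The merge triggers the deployment job: re-run the pipeline
    # and test it on staging data.
    if not all(step() for step in staging_steps):
        return reached
    reached.append("staging")

    # Manual review gate: an admin decides whether the final prod job runs.
    if not approved_by_admin:
        return reached
    reached.append("prod")
    return reached


if __name__ == "__main__":
    ok: Step = lambda: True
    print(promote([ok, ok, ok], [ok], merged_by_teammate=True, approved_by_admin=True))
    # prints ['dev', 'staging', 'prod']
    print(promote([ok, ok, ok], [ok], merged_by_teammate=True, approved_by_admin=False))
    # prints ['dev', 'staging']
```

The point of the sketch is that only code moves between environments, and every hop is guarded: automated checks for dev and staging, a human decision for prod.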

* This is part of my ongoing saga to get closer to something resembling an actual production deployment instead of the notebook-based fit/predict/API patterns you tend to see

** I have a tendency to use CI and CD interchangeably (read: incorrectly). Setting up this example has really helped clarify where

*** In my examples, I only move code between environments, never models. This is the pattern I see most often with ML teams. It’s possible you might deploy your model from dev to staging to prod

Continual - we're lucky to work with ML teams that care about software engineering best practices. If that sounds like you, please hit us up.

#python #ml #dagster #mlflow

Feel free to connect with me on LI: https://www.linkedin.com/in/gustafrcavanaugh/

- 1 participant

- 7 minutes

27 Sep 2022

Today we will be interviewing EvolutionIQ about why they decided to use Dagster.

Tomas Vykruta - https://www.linkedin.com/in/tvykruta/

Karan Uppal - https://www.linkedin.com/in/karanuppal/

If you enjoyed this video, check out some of my other top videos.

Top Courses To Become A Data Engineer In 2022

https://www.youtube.com/watch?v=kW8_l57w74g

What Is The Modern Data Stack - Intro To Data Infrastructure Part 1

https://www.youtube.com/watch?v=-ClWgwC0Sbw

If you would like to learn more about data engineering, then check out Google's GCP certificate

https://bit.ly/3NQVn7V

If you'd like to read up on my updates about the data field, then you can sign up for our newsletter here.

https://seattledataguy.substack.com/

Or check out my blog

https://www.theseattledataguy.com/

And if you want to support the channel, then you can become a paid member of my newsletter

https://seattledataguy.substack.com/subscribe

Tags: Data engineering projects, Data engineer project ideas, data project sources, data analytics project sources, data project portfolio

_____________________________________________________________

Subscribe: https://www.youtube.com/channel/UCmLGJ3VYBcfRaWbP6JLJcpA?sub_confirmation=1

_____________________________________________________________

About me:

I have spent my career focused on all forms of data. I have focused on developing algorithms to detect fraud, reduce patient readmission and redesign insurance provider policy to help reduce the overall cost of healthcare. I have also helped develop analytics for marketing and IT operations in order to optimize limited resources such as employees and budget. I privately consult on data science and engineering problems both solo as well as with a company called Acheron Analytics. I have experience both working hands-on with technical problems as well as helping leadership teams develop strategies to maximize their data.

*I do participate in affiliate programs. If a link has an "*" by it, then I may receive a small portion of the proceeds at no extra cost to you.

- 3 participants

- 1:09 hours