30 Jul 2022

In the sixth lecture for Machine Learning Compilation, CMU professor Tianqi Chen discusses how to bring ML models from existing frameworks into an ML compilation flow. The core of the lecture is getting ML models into an IRModule, because once a model is there we can apply more kinds of transformations to both primitive functions and computation graph functions. First, you will learn how to use the tensor expression domain-specific language to build a TensorIR function. To build end-to-end model executions, we then need to connect multiple TensorIR functions through a computation graph, so next you will learn how to programmatically build an IRModule using the Block Builder APIs. Finally, you will see how these tools can bring a PyTorch model into IRModule format, including using TorchFX to trace a graph from the PyTorch module.
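
The two-step workflow described above (define primitive functions element by element, then connect them into a computation graph) can be sketched in plain Python. This is a toy under stated assumptions, not TVM code: `compute_2d` is a hypothetical eager stand-in for the declarative style of `te.compute` (the real API builds symbolic expressions that are later turned into TensorIR), and `ToyGraphBuilder` is a hypothetical class that only mimics the idea behind the Block Builder by recording each emitted call as a graph node.

```python
def compute_2d(shape, fcompute):
    # Hypothetical eager stand-in for the declarative style of te.compute:
    # each output element (i, j) is defined as fcompute(i, j).
    rows, cols = shape
    return [[fcompute(i, j) for j in range(cols)] for i in range(rows)]


def te_matmul(A, B):
    # C[i, j] = sum over k of A[i, k] * B[k, j], described per output element.
    n, k_ext, m = len(A), len(B), len(B[0])
    return compute_2d(
        (n, m), lambda i, j: sum(A[i][k] * B[k][j] for k in range(k_ext))
    )


def te_relu(X):
    # Y[i, j] = max(X[i, j], 0)
    return compute_2d((len(X), len(X[0])), lambda i, j: max(X[i][j], 0.0))


class ToyGraphBuilder:
    # Hypothetical helper, NOT TVM's BlockBuilder API: it records each emitted
    # call to a primitive function as a graph node and can run the graph later.
    def __init__(self):
        self.nodes = []  # list of (output_name, func, input_names)

    def emit(self, func, *inputs):
        name = f"lv{len(self.nodes)}"
        self.nodes.append((name, func, inputs))
        return name

    def run(self, env, output):
        values = dict(env)  # maps variable names to concrete values
        for name, func, inputs in self.nodes:
            values[name] = func(*(values[i] for i in inputs))
        return values[output]


# Usage: build relu(matmul(x, w)) as a two-node graph, then execute it.
bb = ToyGraphBuilder()
lv0 = bb.emit(te_matmul, "x", "w")
lv1 = bb.emit(te_relu, lv0)
print(bb.run({"x": [[1.0, -2.0]], "w": [[1.0, 0.0], [0.0, 1.0]]}, lv1))  # [[1.0, 0.0]]
```

Keeping the graph as a list of named calls is what makes graph-level transformations possible: a pass can rewrite `bb.nodes` before anything runs.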

Episode 6 Notes: https://mlc.ai/chapter_integration/index.html

Episode 6 Notebook, Integration with ML Frameworks: https://github.com/mlc-ai/notebooks/blob/main/6_Integration_with_Machine_Learning_Frameworks.ipynb

What is ML Compilation?

In this series, the first course of its kind on ML compilation, CMU professor Tianqi Chen explains why AI training and inference workloads need ML compilation to transform and optimize ML models from their development form in frameworks like PyTorch and TensorFlow into their deployment form on CPUs and GPUs. MLC helps address the combinatorial explosion of ML models and deployment hardware platforms.

This course is aimed not only at undergraduate and graduate students but also at people putting ML to use: data scientists, ML engineers and hardware providers. It covers ML programming abstractions, learning-driven search, compilation, and optimized library runtimes. These themes form a new field of ML systems: machine learning compilation.

In this course, we offer the first comprehensive treatment of its kind, studying the key elements of this emerging field systematically. We will learn the key abstractions for representing machine learning programs, automatic optimization techniques, and approaches to optimizing dependency, memory, and performance in end-to-end machine learning deployment. By completing this course, you will learn how to apply the latest developments in ML compilation to build models that can be optimized for emerging hardware stacks. This lets you deploy your models efficiently: minimizing memory usage, reducing inference latency and scaling to multiple heterogeneous hardware nodes.

Full course schedule: https://mlc.ai/summer22/schedule

Instructors:

- Tianqi Chen with Hongyi Jin (TA), Siyuan Feng (TA) and Ruihang Lai (TA)

- 1 participant

- 47 minutes

16 Jul 2022

In the fifth lecture for Machine Learning Compilation, CMU professor Tianqi Chen introduces the automation of program transformations. After reviewing a manual transformation of a primitive tensor function, we'll learn about stochastic schedule transformations, which let us add some randomness to our transformations. A deep dive into stochastic transformations follows, showing how adding random variables enlarges the space of possible programs. We'll explore different search algorithms that automatically find the fastest program, comparing random search to smarter algorithms. Finally, Tianqi discusses Apache TVM's new MetaSchedule API, which provides additional utilities for exploring the search space, such as parallel benchmarking across many processes, cost models to avoid repeated benchmarking, and evolutionary search over traces instead of purely random sampling. You'll learn how MetaSchedule works under the hood: it analyzes each block's data access and loop patterns to propose stochastic transformations of the program.
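
Random search, the baseline the lecture compares smarter algorithms against, fits in a few lines. This is a toy illustration, not MetaSchedule: `sample_split_factors`, `toy_cost` and `random_search` are hypothetical names, and `toy_cost` is a made-up formula standing in for actually compiling and timing each candidate. Each trial makes two stochastic schedule decisions (the inner tile sizes of two loops) and keeps the cheapest candidate seen so far.

```python
import random


def sample_split_factors(extent, rng):
    # Randomly pick (outer, inner) with outer * inner == extent, a toy
    # analogue of sampling a tile size for a loop split.
    divisors = [d for d in range(1, extent + 1) if extent % d == 0]
    inner = rng.choice(divisors)
    return extent // inner, inner


def toy_cost(inner_i, inner_j):
    # Hypothetical stand-in for a benchmark run: pretends an 8 x 4 tile is
    # best. A real flow would build and time the transformed program.
    return abs(inner_i - 8) + abs(inner_j - 4) + 1.0


def random_search(extent_i, extent_j, trials, seed=0):
    rng = random.Random(seed)  # seeded so results are reproducible
    best, best_cost = None, float("inf")
    for _ in range(trials):
        _, ti = sample_split_factors(extent_i, rng)
        _, tj = sample_split_factors(extent_j, rng)
        cost = toy_cost(ti, tj)
        if cost < best_cost:  # keep the fastest candidate found so far
            best, best_cost = (ti, tj), cost
    return best, best_cost


best_tiles, best_cost = random_search(128, 128, trials=200)
print(best_tiles, best_cost)
```

Roughly speaking, a cost model or evolutionary search changes how candidates are proposed; the sample, evaluate, keep-the-best skeleton stays the same.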

Episode 5 Notebook, Automatic Program Optimization: https://github.com/mlc-ai/notebooks/blob/main/5_Automatic_Program_Optimization.ipynb

- 2 participants

- 45 minutes

9 Jul 2022

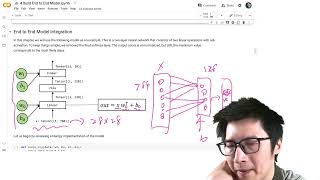

In the fourth lecture for Machine Learning Compilation, CMU professor Tianqi Chen covers building end-to-end models. Using the Fashion-MNIST dataset, you will work with a two-layer neural network made of two linear layers and a ReLU activation. The point of this lecture is not simply to code a 2-layer network, but to understand what happens under the hood of these array computations, using low-level NumPy code that spells out the loop computations and how they are optimized. You will see how to construct an end-to-end IRModule in TVMScript and understand its computation graph. This lecture also introduces Relax, a new abstraction for representing high-level neural network executions.
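
The linear, ReLU, linear network can be written as explicit loops and then wired together as a small computation graph. This is a pure-Python sketch under stated assumptions rather than the course notebook's code: `linear`, `relu`, `call_prim` and `mlp` are hypothetical names, and `call_prim` is only loosely modeled on the destination-passing style that Relax's `call_tir` is built around (the caller allocates the output, the primitive function writes into it).

```python
def linear(X, W, B, Z):
    # Z[i][j] = sum over r of X[i][r] * W[j][r], plus B[j].
    # W has shape (out_features, in_features); Z is preallocated by the caller.
    n, m, k = len(X), len(W), len(X[0])
    for i in range(n):
        for j in range(m):
            acc = 0.0
            for r in range(k):
                acc += X[i][r] * W[j][r]
            Z[i][j] = acc + B[j]


def relu(X, Y):
    # Elementwise max(x, 0), written into the preallocated Y.
    for i in range(len(X)):
        for j in range(len(X[0])):
            Y[i][j] = max(X[i][j], 0.0)


def call_prim(prim_func, inputs, shape):
    # Hypothetical helper: allocate the output, let prim_func fill it in.
    rows, cols = shape
    out = [[0.0] * cols for _ in range(rows)]
    prim_func(*inputs, out)
    return out


def mlp(x, w0, b0, w1, b1):
    # Computation graph: linear -> relu -> linear.
    n = len(x)
    lv0 = call_prim(linear, (x, w0, b0), (n, len(w0)))
    lv1 = call_prim(relu, (lv0,), (n, len(w0)))
    return call_prim(linear, (lv1, w1, b1), (n, len(w1)))


out = mlp([[1.0, 2.0]], [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0], [[2.0, 3.0]], [0.5])
print(out)  # [[2.5]]
```

Separating allocation from computation keeps each primitive function free of memory management, which leaves the graph level free to decide where buffers live.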

Episode 4 Notes: https://mlc.ai/chapter_end_to_end/index.html

Episode 4 Notebook, End to End Model Execution: https://github.com/mlc-ai/notebooks/blob/main/4_Build_End_to_End_Model.ipynb

- 1 participant

- 55 minutes

1 Jul 2022

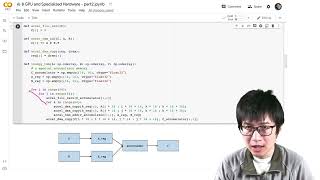

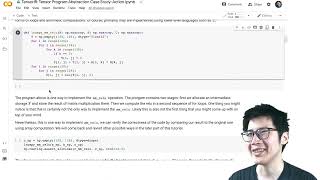

In the third lecture for Machine Learning Compilation, CMU professor Tianqi Chen presents a case study in tensor program abstraction with TensorIR. The primary purpose of tensor program abstraction is to represent loops and the corresponding hardware acceleration choices, such as threading, use of specialized hardware instructions, and memory access. TensorIR is Apache TVM's new low-level intermediate representation with full scheduling support. You will learn how blocks, the basic unit of computation in TensorIR, generalize high-dimensional tensor expressions. Tianqi walks through several code examples that give insight into how TensorIR works internally and how it transforms primitive tensor functions.
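
A matmul followed by ReLU shows what a block adds on top of plain loops: each loop nest binds its loop variables to block axes, marks those axes as spatial or reduction, and initializes the reduction on its first step. The sketch below mimics that structure in pure Python; it is an illustration, not TVMScript, and `mm_relu` is a hypothetical name. The `vi, vj, vk` variables and the `vk == 0` check stand in for TensorIR's axis bindings and its init statement.

```python
def mm_relu(A, B, C):
    # Loop-level sketch of a function with two blocks: "Y" (matmul) and "C" (relu).
    n, k_ext, m = len(A), len(B), len(B[0])
    Y = [[None] * m for _ in range(n)]  # uninitialized intermediate buffer

    # Block "Y": vi and vj are spatial axes, vk is a reduction axis.
    for i in range(n):
        for j in range(m):
            for k in range(k_ext):
                vi, vj, vk = i, j, k  # identity binding of loops to block axes
                if vk == 0:  # plays the role of the block's init statement
                    Y[vi][vj] = 0.0
                Y[vi][vj] = Y[vi][vj] + A[vi][vk] * B[vk][vj]

    # Block "C": both axes spatial, elementwise ReLU into the output buffer.
    for i in range(n):
        for j in range(m):
            vi, vj = i, j
            C[vi][vj] = max(Y[vi][vj], 0.0)


C = [[0.0, 0.0], [0.0, 0.0]]
mm_relu([[1.0, -1.0], [2.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]], C)
print(C)  # [[0.0, 0.0], [2.0, 4.0]]
```

Because the block records which axes are spatial and which are reductions, a scheduler can tell that reordering or parallelizing `vi`/`vj` is safe, while `vk` carries a dependency.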

Episode 3 Notes: https://mlc.ai/chapter_tensor_program/case_study.html

Episode 3 Notebook, Tensor Program Abstraction Case Study: TensorIR: https://github.com/mlc-ai/notebooks/blob/main/3_TensorIR_Tensor_Program_Abstraction_Case_Study_Action.ipynb

Episode 3 Exercises for TensorIR: https://mlc.ai/chapter_tensor_program/tensorir_exercises.html

- 1 participant

- 1:07 hours

25 Jun 2022

In the second lecture for Machine Learning Compilation, CMU professor Tianqi Chen covers tensor program abstraction: the abstraction for a single "unit" step of computation, and the opportunities it opens up for machine learning compilation transformations. You will learn concepts such as multi-dimensional buffers, loop nests and computation statements, as well as transformations such as loop splitting, loop reordering and thread binding.
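
Loop splitting and loop reordering change how a loop nest is traversed without changing what it computes. The pure-Python sketch below (hypothetical function names, not TVM schedule calls) splits the loop of a vector add with `i = i0 * factor + i1`, then swaps the two loops and records the resulting visit order. Thread binding would go one step further and map `i0` and `i1` onto GPU block and thread indices; plain Python cannot express that, so it only appears as a comment.

```python
def vector_add(A, B, C):
    # Original loop nest: a single loop over i.
    for i in range(len(A)):
        C[i] = A[i] + B[i]


def vector_add_split(A, B, C, factor):
    # Split i into (i0, i1) with i = i0 * factor + i1.
    # Assumes len(A) is divisible by factor. On a GPU, i0 and i1 could be
    # bound to block and thread indices (thread binding).
    n = len(A)
    for i0 in range(n // factor):
        for i1 in range(factor):
            i = i0 * factor + i1
            C[i] = A[i] + B[i]


def vector_add_split_reorder(A, B, C, factor):
    # Split, then reorder so i1 is the outer loop. The result is identical,
    # but indices are visited in a different order, which is returned.
    n = len(A)
    order = []
    for i1 in range(factor):
        for i0 in range(n // factor):
            i = i0 * factor + i1
            C[i] = A[i] + B[i]
            order.append(i)
    return order


A, B = list(range(8)), [10] * 8
C = [0] * 8
print(vector_add_split_reorder(A, B, C, 4))  # [0, 4, 1, 5, 2, 6, 3, 7]
print(C)  # [10, 11, 12, 13, 14, 15, 16, 17]
```

The visit order matters on real hardware: the reordered version strides through memory, which is usually worse for cache locality even though the output is the same.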

Episode 2 Slides: https://mlc.ai/summer22/slides/2-TensorProgram.pdf

Episode 2 Notes: https://mlc.ai/chapter_tensor_program/

Episode 2 Notebook, Tensor Program Abstraction in Action: https://github.com/mlc-ai/notebooks/blob/main/2_tensor_program_abstraction.ipynb

- 1 participant

- 48 minutes

18 Jun 2022

An Introduction to Machine Learning Compilation (MLC). In this opening lecture of the first course of its kind on ML compilation, CMU professor Tianqi Chen explains why AI training and inference workloads need ML compilation to transform and optimize ML models from their development form in frameworks like PyTorch and TensorFlow into their deployment form on CPUs and GPUs. MLC helps address the combinatorial explosion of ML models and deployment hardware platforms.

The course has a minimal set of prerequisites in data science and machine learning:

- Python, and familiarity with NumPy

- Some background in at least one deep learning framework (e.g. PyTorch, TensorFlow, JAX)

- Experience with systems programming (e.g. C/CUDA) is beneficial but not required

Episode 1 Slides: https://mlc.ai/summer22/slides/1-Introduction.pdf

Episode 1 Notes: https://mlc.ai/chapter_introduction/

- 1 participant

- 47 minutes