18 Oct 2021

Presented at KubeCon North America 2021 by Yuval Lifshitz & Huamin Chen

- 1 participant

- 17 minutes

11 May 2017

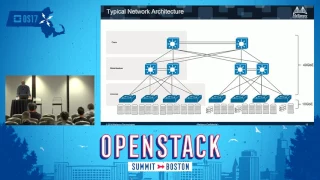

High-performance networks that can now reach 100 Gb/s, along with advanced protocols like RDMA, are making Ceph a mainstream enterprise storage contender. Ceph has gained major traction for low-end applications, but with a little extra focus on the network it can easily compete with the big enterprise storage players. Based on technologies originally developed for the High Performance Compute (HPC) industry, very fast networks and the Remote Direct Memory Access (RDMA) protocol are now moving to the Enterp

- 8 participants

- 37 minutes

11 May 2017

A full project update on current work, future roadmap, and project contributions.

- 6 participants

- 41 minutes

11 May 2017

Ceph includes snapshot technology in most of its projects: the base RADOS layer, RBD block devices, and CephFS filesystem. This talk will briefly overview how snapshots are implemented in order to discuss their implications, before moving on to example use cases for operators. Learn how to use snapshots for backup and rollback-ability within RBD and CephFS, the benefits they bring over other mechanisms, how to use the new snapshot trim config options, and most importantly: how much they cost in

- 7 participants

- 39 minutes

11 May 2017

OpenStack's Shared File Systems service, Manila, provides a modular framework for storage backends to securely export file shares through network-separated data paths between tenants. CephFS, a POSIX-compliant distributed file system built on top of Ceph, is ready to leverage this multi-tenant framework to become the cloud-ready, open source, scalable storage backend that Manila lacks. It can serve NFS shares using NFS-Ganesha, a user-space NFS server. Recent updates to NFS-Ganesha and its inte

- 8 participants

- 42 minutes

11 May 2017

Traditional server architecture is not a perfect fit for distributed storage because the network is usually saturated while CPU utilization stays low. Using virtual machines or multiple daemons also carries the risk of losing multiple storage devices when a single server fails. Ambedded aims to address those problems with its microserver architecture, which is designed for distributed storage and optimizes Ceph performance with a 1-to-1 architecture.

- 1 participant

- 36 minutes

11 May 2017

The disruptive Intel(R) Optane SSDs based on 3D Xpoint technology fill the performance gap between DRAM and NAND-based SSDs, while Intel(R) 3D NAND TLC is reducing the cost gap between SSDs and traditional spindle hard drives, making all-flash storage possible. In this session, we will 1) Discuss OpenStack storage Ceph reference design on the first Intel Optane (3D Xpoint) and P4500 TLC NAND based all-flash Ceph cluster 2) Share Ceph BlueStore tunings and optimizations, latency analysis, TC

- 5 participants

- 33 minutes

11 May 2017

Ceph continues to be the leading block storage of choice for OpenStack VM deployments. Latency-sensitive workloads such as databases remain an emerging Ceph use case: latency guarantees are typically a challenge in shared VM storage environments, as compute and networked-storage resources cease to scale as VM density increases. With a growing flash footprint on clients, flash-based read/write caching has the potential to improve storage latency by reducing IO path dependency on the network. We'll introduc

- 11 participants

- 46 minutes

28 Apr 2016

The Ceph upstream community is declaring CephFS stable for the first time in the recent Jewel release, but that declaration comes with caveats: while we have filesystem repair tools and a horizontally scalable POSIX filesystem, we have default-disabled exciting features like horizontally-scalable metadata servers and snapshots. This talk will present exactly what features you can expect to see, what's blocking the inclusion of other features, and what you as a user can expect and can contribute

- 3 participants

- 37 minutes

27 Apr 2016

Ceph is a popular storage backend for OpenStack deployments, in use for block devices via RBD/Cinder and for object storage with RGW. Ceph also includes a filesystem, CephFS, which is suitable for integration with Manila, the OpenStack shared filesystem service. This presentation introduces the new CephFS Native driver for Manila, describing the implementation of the driver and how to deploy and use it. Areas of particular interest for Ceph users will include how CephFS snapshots map to Man

- 6 participants

- 38 minutes

5 Nov 2014

#vBrownBag 2014 OpenStack Paris - Boyan Krosnov – Block Storage: When Ceph is not enough

- 1 participant

- 7 minutes

5 Sep 2014

This video shows how I built my OpenStack Icehouse test lab under ESXi 5.1 and the way it works connected to a Ceph cluster for storing Glance images and Cinder block devices.

All the machines are running CentOS 7, which is not fully supported at the moment.

- 1 participant

- 37 minutes

19 May 2014

By Ian Colle

As the size and performance requirements of cloud deployments increase, storage architects are increasingly seeking new architectures that scale.

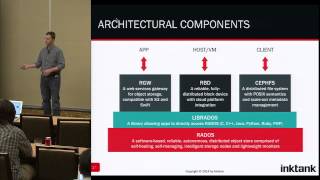

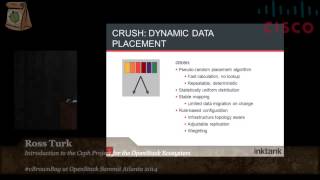

Ceph is a fully open source distributed object store, network block device, and file system designed for reliability, performance, and scalability from terabytes to exabytes. Ceph utilizes a novel placement algorithm (CRUSH), active storage nodes, and peer-to-peer gossip protocols to avoid the scalability and reliability problems associated with centralized controllers and lookup tables.

Ceph's architecture is based on RADOS, an object store with support for snapshots and distributed computation. Ceph offers a Swift and S3-compatible REST API for seamless data access to RADOS. Ceph's network block device can be used to store large images and volumes in RADOS, supporting thin provisioning and snapshots. Ceph has native support for Qemu/KVM, libvirt, CloudStack and OpenStack which makes it an attractive storage option for cloud deployments.

As a result of participating in this session, attendees will gain a full understanding of the Ceph architecture, its current status, and plans for future development. Attendees will also learn how Ceph integrates with OpenStack to provide a unified object and block storage service.
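The abstract's point about computing placement instead of consulting a central lookup table can be illustrated with a small sketch. This is not CRUSH: it swaps in plain rendezvous (highest-random-weight) hashing, and the names `place`, `_score`, and the `osd.N` node labels are hypothetical. It only shows that any client holding the same node list derives the same replica set on its own.

```python
import hashlib
from typing import List


def _score(object_name: str, node: str) -> int:
    """Deterministic pseudo-random weight for an (object, node) pair."""
    digest = hashlib.sha256(f"{object_name}:{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, nodes: List[str], replicas: int = 3) -> List[str]:
    """Pick `replicas` nodes for an object by ranking nodes on their score.

    Illustrative rendezvous hashing, NOT the CRUSH algorithm: no failure
    domains, no weights, no hierarchy.
    """
    ranked = sorted(nodes, key=lambda n: _score(object_name, n), reverse=True)
    return ranked[:replicas]


if __name__ == "__main__":
    cluster = [f"osd.{i}" for i in range(8)]
    print(place("img-0001", cluster))
```

Because every node is scored independently, removing one node leaves the relative order of the others unchanged, so only objects that had a replica on the removed node need to move.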

Help us caption & translate this video!

http://amara.org/v/FG0G/

- 1 participant

- 40 minutes

16 May 2014

Open Storage: Intel's Investments in Object Storage features Intel's Rob Armstrong, Tushar Gohad and Paul Luse.

Come hear about all the existing things Intel is doing in Swift and with Object Storage in general. The Juno release will introduce the notion of Storage Policies to Swift and lay the groundwork for a whole host of new usage models. Also with Juno we'll be introducing Erasure Codes as the first significant Storage Policy. We'll also talk about a new test/QA cluster that has been donated by SwiftStack, Intel, and HGST, which uses the latest drive and processor technology to provide automated tests and a public baseline for performance, a key aspect of the release process not previously available. We'll round this out with an update and demo on COSBench, the benchmarking tool, which now has more storage types like Ceph, S3 verified, and more test scenarios supported.

- 3 participants

- 47 minutes

15 May 2014

There has been a lot of buzz surrounding Ceph and OpenStack lately, and a lot of new developments. This talk will provide a quick overview of Ceph's architecture and the integration between Ceph and OpenStack, examine recent use cases, and discuss what is coming up next. We'll take a look at how to keep fans of both platforms happy and discuss how the less-oft-used pieces of the Ceph platform can help augment your OpenStack setup. We'll also talk about how to get started and what the community can do to get involved.

- 1 participant

- 13 minutes

13 May 2014

The University of Alabama - Birmingham case study features John-Paul Robinson and Dell's Kamesh Pemmaraju.

The University of Alabama at Birmingham gives scientists and researchers a massive, on-demand, virtual storage cloud using OpenStack and Ceph for less than $0.41 per gigabyte. This session will detail how the university IT staff deployed a private storage cloud infrastructure using the Dell OpenStack cloud solution with Dell servers, storage, networking and OpenStack, and Inktank Ceph.

After assessing a number of traditional storage scenarios, the University partnered with Dell and Inktank to architect a centralized cloud storage platform that was capable of scaling seamlessly and rapidly, was cost-effective, and that could leverage a single hardware infrastructure for the OpenStack compute and storage environment.

- 4 participants

- 38 minutes

13 May 2014

Ceph Update: Erasure Coding and Tiering features Ross Turk from Inktank.

- 1 participant

- 13 minutes

11 Feb 2014

Unified Cloud Storage with Synnefo + Ganeti + Archipelago + Ceph

Speaker: Vangelis Koukis

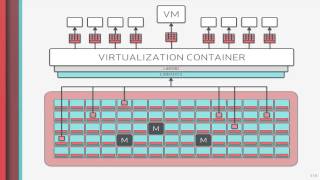

This talk presents Synnefo's evolution since FOSDEM '13, focusing on its integration with Ganeti, Archipelago, and Ceph to deliver a unified, scalable storage substrate for IaaS clouds.

The talk will begin with an overview of the Synnefo architecture (Python, Django, Ganeti, and Xen or KVM). Then, it will introduce Archipelago, a software-defined, distributed storage layer that decouples Volume and File operations/logic from the underlying storage technology, used to store the actual data. Archipelago provides a unified way to provision, handle and present Files, Images, Volumes and Snapshots independently of the storage backend. We will focus on using Ceph/RADOS as a backend for Archipelago.

With Archipelago and its integration with Synnefo and Ganeti one can:

- maintain commonly-used data sets as snapshots and attach them as read-only disks or writeable clones in VMs running the application,

- begin with a base OS image, bundle all application and supporting library code inside it, and upload it to the Archipelago backend in a syncing, Dropbox-like manner,

- start a parallel HPC application in hundreds of VMs, thinly provisioned from this Image,

- modify the I/O processing pipeline to enable aggressive client-side caching for improved number of IOPS,

- create point-in-time snapshots of running VM disks,

- share snapshots with other users, with fine-grained Access Control Lists,

- and sync them back to their PC for further processing.

The talk will include a live demonstration of a workflow including this functionality in the context of a large-scale public cloud.

The intended audience spans from enterprise users comparing cloud platforms, to developers who wish to discover a different design approach to IaaS clouds and storage virtualization.

Please see the abstract above for a rough sketch of the proposed presentation. The presentation will mostly consist of live demonstration and a small deck of slides, meant to describe the main features of Archipelago and its integration with Synnefo, as well as provoke discussion in the Q&A session.

The main workflow in the demonstration will be [copied and pasted from the Abstract]:

- begin with a base OS image, bundle all application and supporting library code inside it, and upload it to the Archipelago backend in a syncing, Dropbox-like manner,

- start a parallel HPC application in hundreds of VMs, thinly provisioned from this Image,

- modify the I/O processing pipeline to enable aggressive client-side caching for improved number of IOPS,

- create point-in-time snapshots of running VM disks,

- share snapshots with other users, with fine-grained Access Control Lists,

- and sync them back to their PC for further processing

- 4 participants

- 37 minutes

17 Jan 2014

Presenter(s): Sage Weil

URL: https://lca2014.linux.org.au/schedule/30079/view_talk

The Ceph distributed storage system sports object, block, and file interfaces to a single storage cluster. The core infrastructure supporting these use cases is a distributed object storage and compute platform called RADOS (reliable autonomic distributed object store). The low-level librados API exports a conceptually simple yet powerful interface for storing and processing large amounts of data and is well-suited for backing web-scale applications and data analytics. It features a rich object model (bytes, attributes, and sorted keys/values), efficient key/value storage (using leveldb on the backend), atomic transactions (including efficient compare-and-swap semantics), object cloning and other primitives for supporting snapshots, simple inter-client communication and coordination (à la ZooKeeper), and the ability to extend the object interface using arbitrary code executed on the storage node as part of the IO pipeline. Librados classes are a plugin API that allows computation to be performed on the storage node, avoiding expensive read/modify/write cycles, while still leveraging the replication, atomicity, and high-availability provided by RADOS.

This talk will include a brief overview of the Ceph architecture, and then focus on librados, the API and how it is used, the security model, and some examples of RADOS classes implementing interesting functionality (like cls_lua, which embeds a Lua interpreter for arbitrary code execution).
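The object model described in this abstract (byte data, attributes, sorted keys/values, and compare-and-swap style transactions) can be pictured with a toy in-memory model. This is a sketch under stated assumptions and not the librados API: `ToyStore`, `ToyObject`, and every method name below are hypothetical, and a single lock stands in for the atomicity that RADOS provides on the storage node.

```python
import threading
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ToyObject:
    """One object: a byte payload, attributes, and a key/value map."""
    data: bytes = b""
    xattrs: Dict[str, bytes] = field(default_factory=dict)
    omap: Dict[str, bytes] = field(default_factory=dict)


class ToyStore:
    """Hypothetical in-memory stand-in for an object store (not librados)."""

    def __init__(self) -> None:
        self._objects: Dict[str, ToyObject] = {}
        self._lock = threading.Lock()

    def read(self, name: str) -> bytes:
        with self._lock:
            obj = self._objects.get(name)
            return obj.data if obj else b""

    def omap_set(self, name: str, key: str, value: bytes) -> None:
        with self._lock:
            self._objects.setdefault(name, ToyObject()).omap[key] = value

    def omap_keys(self, name: str, start: str = "") -> List[str]:
        """Return keys >= start in sorted order, as a range scan would."""
        with self._lock:
            obj = self._objects.get(name)
            return sorted(k for k in obj.omap if k >= start) if obj else []

    def compare_and_write(self, name: str, attr: str,
                          expected: Optional[bytes],
                          new_value: bytes, data: bytes) -> bool:
        """Atomically: if attr == expected, update attr and data together."""
        with self._lock:
            obj = self._objects.get(name)
            current = obj.xattrs.get(attr) if obj else None
            if current != expected:
                return False  # someone else won the race; nothing changes
            if obj is None:
                obj = self._objects.setdefault(name, ToyObject())
            obj.xattrs[attr] = new_value
            obj.data = data
            return True
```

A writer that read version `b"1"` passes it as `expected`; if another client has bumped the version in the meantime, the call returns `False` and neither the attribute nor the data is touched, which is the read/modify/write race that a compare-and-swap primitive avoids.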

http://lca2014.linux.org.au - http://www.linux.org.au

CC BY-SA - http://creativecommons.org/licenses/by-sa/4.0/legalcode.txt

- 3 participants

- 48 minutes

9 Nov 2013

For more than a year, Ceph has become increasingly popular and has seen several deployments inside and outside OpenStack. The community and Ceph itself have greatly matured. Ceph is a fully open source distributed object store, network block device, and file system designed for reliability, performance, and scalability from terabytes to exabytes. Ceph utilizes a novel placement algorithm (CRUSH), active storage nodes, and peer-to-peer gossip protocols to avoid the scalability and reliability problems associated with centralized controllers and lookup tables.

Since Grizzly, the Ceph integration has gained some good additions: Havana definitely brought tons of awesome features. It also made the integration easier and definitely removed all the tiny hacks. All these things will certainly encourage people to use Ceph in OpenStack. Ceph is excellent to back OpenStack platforms, no matter how big and complex the platform.

The main goal of the talk is to convince those of you who aren't already using Ceph as a storage backend for OpenStack to do so. I consider the Ceph technology to be the de facto storage backend for OpenStack for a lot of good reasons that I'll expose during the talk. In this session, Sebastien Han from eNovance will go through several subjects such as (depends on the time that I'll get):

- Quick Ceph overview (for those of you who are not familiar with it)

- Quick state of the integration with OpenStack (general state and Havana's best additions)

- Quick cinder drivers overview and comparisons

- Building a Ceph cluster - general considerations

- Use cases and design examples (hardware hardware hardware)

- Achieve HA with Ceph

- Operations: backups, monitoring, upgrades

- Tips and best practices

- 1 participant

- 39 minutes

9 Nov 2013

With the rise of cloud computing and big data, more and more companies are starting to build their own cloud storage systems. Swift and Ceph are two popular open source software stacks, which are widely deployed in today's OpenStack-based cloud environments to implement object and virtual block services. In this presentation, we do a deep study of both Swift and Ceph performance on a commodity x86 platform, including the testing environment, methodology and a thorough analysis. A tuning guide and optimization BKMs (best known methods) will also be shared for reference.

- 2 participants

- 40 minutes

8 Nov 2013

Hear details about a unique deployment of the Dell OpenStack-Powered Cloud Solution with Inktank Ceph installed at a large, nationally recognized American university that specializes in cancer and genomic research. The university needed a scalable, secure, centralized data repository to support approximately 900 researchers, an ever-expanding number of research projects, and a rapidly expanding universe of data. The Dell and Inktank cloud storage solution addresses these storage challenges with an open source solution that leverages the Dell Crowbar Framework and Reference Architecture. After assessing a number of traditional storage scenarios, the university partnered with Dell and Inktank to architect a centralized cloud storage platform that is capable of scaling seamlessly and rapidly, is cost-effective, and can leverage a single hardware infrastructure, with Dell PowerEdge R-720XD servers and the Dell Reference Architecture, for their OpenStack compute and storage environment.

- 1 participant

- 12 minutes

7 Nov 2013

Ceph is a massively scalable, open source, software defined storage system that runs on commodity hardware. Integrated with OpenStack since the Folsom release, Ceph provides cost-effective block and object storage for the cloud. This demonstration will showcase tools for enterprises who operate Ceph clusters, and will show how they can be used to detect issues, troubleshoot, and report on the health of the entire storage cluster. https://docs.openstack.org/openstack-ansible/latest/user/ceph/full-deploy.html

Connect with us:

OpenInfra Twitter: https://twitter.com/openinfradev

OpenInfra LinkedIn: https://www.linkedin.com/company/open-infrastructure-foundation/mycompany/

OpenInfra Facebook: https://www.facebook.com/openinfradev

OpenInfra Website: https://openinfra.dev/

#OpenStack #OpenInfra #OpenStackDemo #OpenSource

- 1 participant

- 12 minutes

7 Nov 2013

This presentation covers practical experience from building an OpenStack private cloud with a specific emphasis on high availability. Based on a production deployment of OpenStack Grizzly at Pixelpark (a hosting company based in Berlin, Germany), we explore and explain highly-available data storage with Ceph, OpenStack service high availability with Pacemaker, virtual machine high availability in OpenStack Compute, and high availability considerations for OpenStack Networking. We also outline the lessons a previously conventional hosting company has learned from moving to a private cloud deployment, both from a technical and organizational perspective. About the speakers: Sebastian Kachel is an all-around devops guy at Pixelpark, and has been involved in the OpenStack deployment project since its inception. Florian Haas has provided consulting, training and guidance to Pixelpark over the course of the project. https://www.openstack.org/

Speaker(s):

Sebastian Kachel

Florian Haas https://twitter.com/xahteiwi

Connect with us:

OpenInfra Twitter: https://twitter.com/openinfradev

OpenInfra LinkedIn: https://www.linkedin.com/company/open-infrastructure-foundation/mycompany/

OpenInfra Facebook: https://www.facebook.com/openinfradev

OpenInfra Website: https://openinfra.dev/

#OpenStack #OpenInfra #OpenSource #PrivateHybridCloud #OpenStackHongKongSummit2013

- 2 participants

- 29 minutes

10 Oct 2013

Abstract:

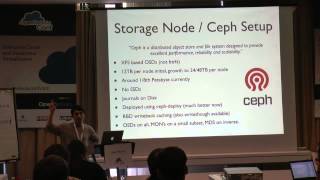

BBC Research & Development are in the process of deploying a department-wide virtualization solution, catering for use cases including web development, machine learning, transcoding, media ingress and system testing. This talk discusses the implementation of a high-performance Ceph storage backend and the challenges of virtualization in a broadcast research and development environment.

Bio:

Joel Merrick has been involved in system administration and engineering for well over a decade. He is the project lead for an internal VM platform for BBC Research and Development. He first became involved in virtualisation more than 5 years ago, both professionally and through work for the non-profit Sahana Foundation, where live production deployments of the software have been running under KVM 'in the field'.

- 1 participant

- 29 minutes

27 Sep 2013

RBD, the RADOS Block Device in Ceph, gives you virtually unlimited scalability (without downtime), high performance, intelligent balancing and self-healing capabilities that traditional SANs can't provide. Ceph achieves this higher throughput through a unique system of placing objects across multiple nodes, and adaptive load balancing that replicates frequently accessed objects over more nodes. This talk will give a brief overview of the Ceph architecture, current integration with Apache CloudStack, and recent advancements with Xen and blktap2.

- 2 participants

- 29 minutes

23 Jul 2013

http://www.twitter.com/user/LinuxConference https://twitter.com/LinuxConference

- 7 participants

- 1:40 hours

3 May 2013

Speaker: Josh Durgin, Inktank

Ceph is an open source distributed object store, network block device, and file system. Ceph can be used for object storage through its S3 and Swift APIs. It can also provide storage for network block devices, with the thin provisioning and copy-on-write cloning features necessary to support large-scale virtualization.

Since the Folsom release, Cinder makes block storage for backing VMs a first-class feature in OpenStack. Block devices can be created from images stored in Glance, and with Ceph's RBD behind both, new VMs can be created faster while using less space. In the latest Ceph 'Bobtail' release, you can start many VMs instantly by cloning from templates. Also, on the storage backend side, you will see increased I/O performance due to improved threading.

This session will cover an intro to Ceph, the current status of Ceph and Grizzly, the latest features of the Ceph Bobtail release and also the technical implications and the advantages of block storage within OpenStack.
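Why cloning from a template is both fast and space-efficient can be seen in a toy copy-on-write model. This is only a sketch, not how RBD is implemented: `ToyImage`, `BLOCK_SIZE`, and the method names are hypothetical, and it assumes the template is never written again once it has been cloned.

```python
from typing import Dict, Optional

BLOCK_SIZE = 4096  # illustrative block size, not an RBD default


class ToyImage:
    """Thin-provisioned block image with copy-on-write clones (toy model)."""

    def __init__(self, size_blocks: int,
                 parent: Optional["ToyImage"] = None) -> None:
        self.size_blocks = size_blocks
        self.parent = parent
        # Only blocks written to *this* image are stored here.
        self.blocks: Dict[int, bytes] = {}

    def clone(self) -> "ToyImage":
        """Creating a clone copies no data; it just points at the parent."""
        return ToyImage(self.size_blocks, parent=self)

    def write(self, index: int, data: bytes) -> None:
        if not 0 <= index < self.size_blocks:
            raise IndexError("block index out of range")
        if len(data) != BLOCK_SIZE:
            raise ValueError("data must be exactly one block")
        self.blocks[index] = data  # the parent is never modified

    def read(self, index: int) -> bytes:
        if not 0 <= index < self.size_blocks:
            raise IndexError("block index out of range")
        image: Optional[ToyImage] = self
        while image is not None:  # fall through to ancestors
            if index in image.blocks:
                return image.blocks[index]
            image = image.parent
        return b"\0" * BLOCK_SIZE  # never-written blocks read as zeros

    def allocated_blocks(self) -> int:
        return len(self.blocks)
```

In this model a new VM disk costs nothing until the VM writes to it, and each write allocates only the block that changed, which matches the "faster while using less space" behaviour the abstract describes.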

For more summit videos, visit: http://www.openstack.org/summit/portland-2013/session-videos/

- 1 participant

- 40 minutes

23 Apr 2013

Speakers: Neil Levine (Inktank), Kamesh Pemmaraju (Dell)

Inktank Ceph is a transformational open source storage solution fully integrated into OpenStack, providing scalable object and block storage (via Cinder) using commodity servers. The Ceph solution is resilient to failures, uses storage efficiently, and performs well under a variety of VM workloads. Dell Crowbar is an open source software framework that can automatically deploy Ceph and OpenStack on bare metal servers in a matter of hours. The Ceph team worked with Dell to create a Ceph barclamp (a Crowbar extension) that integrates Glance, Cinder, and Nova-Volume. As a result, it is a lot faster and easier to install, configure, and manage a sizable OpenStack and Ceph cluster that is tightly integrated and cost-optimized.

Hear how OpenStack users can address their storage deployment challenges:

- Overview of the Ceph architecture with unique features and benefits

- Overview of Dell Crowbar and how it can automate and simplify Ceph/OpenStack deployments

- Best practices in deploying cloud storage with Ceph and OpenStack.

For more summit videos, visit: http://www.openstack.org/summit/portland-2013/session-videos/

- 2 participants

- 34 minutes

17 Apr 2013

Ross Turk, Inktank, at OpenStack Summit 2013 with John Furrier and Jeff Frick.

Ross Turk, Vice President of Community at Inktank, visited SiliconAngle’s theCube during the OpenStack Summit taking place in Portland this week to discuss Inktank’s open source project, Ceph, and the current community environment with John Furrier and Jeff Frick.

The Ceph Project has been in development since 2004, and is a commodity storage software layer that exposes the storage resources of the hardware it sits on top of. Furrier asked Turk what kind of traction Ceph is getting with OpenStack, and what sets it apart from other storage software.

The first reason is the lowest-cost-per-gig, Turk readily answered. Keeping storage costs down is key, especially when scale is becoming more and more of an issue, and, because of Ceph’s self-managing and self-healing capabilities, it is designed for just that. The other reason is Ceph’s focus on integration with cloud, especially with OpenStack.

In the Ceph community, there has been overwhelming growth in participation. While proceeding in an orderly fashion with everyone who wants to contribute to the project, Ceph’s community management team also has to focus on who will maintain the project’s quality and its architectural integrity, making governance a main challenge.

Turk also discussed the trends he has seen in the open source community. Everyone who, in the early days, was saying “I’m an open source person” is now saying “I’m a cloud person,” Turk observed, calling it an interesting movement. The open source and cloud communities are really intermingling. Additionally, it’s great to see that everyone has now realized the importance and value of the community. All kinds of companies that didn’t have community intelligence before definitely have it now.

#theCUBE #Ceph #OpenStack #SiliconANGLE #Inktank

- 3 participants

- 19 minutes

17 Apr 2013

In this video from the Lustre User Group 2013 conference, Sage Weil from Inktank presents: An Intro to Ceph for HPC.

Learn more at:

https://www.gaveledge.com/SFS1301/agenda

and

http://inktank.com

- 6 participants

- 23 minutes

7 Mar 2013

Sage Weil discusses the Ceph distributed storage system at the October 2011 meeting of the UNIX Users Association (UUASC), hosted at Media Temple in Culver City, California.

Sage's abstract is below.

Since the presentation, Sage cofounded Inktank (www.inktank.com) to provide professional services and support for Ceph.

More information on UUASC can be found at http://uuasc.org .

Abstract

As the size and performance requirements of storage systems have increased, file system designers have looked to new architectures to facilitate system scalability. Ceph is a fully open source distributed object store, network block device, and file system designed for reliability, performance, and scalability from terabytes to exabytes.

Ceph's architecture consists of two main components: an object storage layer, and a distributed file system that is constructed on top of this object store. The object store provides a generic, scalable storage platform with support for snapshots and distributed computation. This storage backend is used to provide a simple network block device (RBD) with thin provisioning and snapshots, or an S3 or Swift compatible RESTful object storage interface. It also forms the basis for a distributed file system, managed by a distributed metadata server cluster, which similarly provides advanced features like per-directory granularity snapshots, and a recursive accounting feature that provides a convenient view of how much data is stored beneath any directory in the system.

This talk will describe the Ceph architecture and then focus on the current status and future of the project. This will include a discussion of Ceph's relationship with btrfs, the file system and RBD clients in the Linux kernel, RBD support for virtual block devices in Qemu/KVM and libvirt, and current engineering challenges.

Sage Weil designed Ceph as part of his PhD research in Storage Systems at the University of California, Santa Cruz. Since graduating, he has continued to refine the system with the goal of providing a stable next generation distributed file system for Linux.

- 8 participants

- 1:33 hours

13 Dec 2012

Ceph creator Sage Weil speaking at the Storage Developer Conference in Santa Clara in Sep 2012.

- 1 participant

- 49 minutes

31 Jul 2012

The quickest way to get a proprietary storage vendor to change the subject is to ask them what they think of Ceph. What makes them so uncomfortable? Ceph provides object storage, block storage, and a distributed file system -- in a single storage platform -- for free. Ceph runs on commodity hardware, allowing you to power your storage with the best technology available.

Ceph's powerful distributed block device allows you to scale cloud platforms without limitation, and its object store is compatible with applications written for S3 or Swift. Ceph has been designed with no single point of failure and intelligent nodes that are self-managing and self-healing. It's time to throw away all the old rules and start a new era of free storage solutions.

You can pay for expensive and limited proprietary products. Or you can use Ceph and invest that money in your business instead.

Ross Turk

Inktank

Ross Turk is responsible for building a strategic relationship with users, contributors, and the open source community. Ross brings more than 15 years of experience creating software, managing complex IT systems, and helping companies understand and serve developers. Before joining Inktank, Ross managed developer communities for Talend, Alcatel-Lucent and SourceForge.net, the world's largest open source community. In the more distant past, Ross ran the engineering team for SourceForge and provided architectural leadership.

- 1 participant

- 6 minutes

10 Jul 2012

"Scaling Storage with Ceph", Ross Turk, VP of Community, Inktank

Ceph is an open source distributed object store, network block device, and file system designed for reliability, performance, and scalability. It runs on commodity hardware, has no single point of failure, and is supported by the Linux kernel. This talk will describe the Ceph architecture, share its design principles, and discuss how it can be part of a cost-effective, reliable cloud stack.

- 2 participants

- 49 minutes