13 Sep 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Pegasus Workflow Management System

Speaker:

Karan Vahi, Information Sciences Institute, University of Southern California

Abstract:

Workflows are a key technology for enabling complex scientific computations. They capture the interdependencies between processing steps in data analysis and simulation pipelines as well as the mechanisms to execute those steps reliably and efficiently. Workflows can capture complex processes to promote sharing and reuse, and also provide provenance information necessary for the verification of scientific results and scientific reproducibility. Pegasus (https://pegasus.isi.edu) is being used in a number of scientific domains doing production grade science. In 2016 the LIGO gravitational wave experiment used Pegasus to analyze instrumental data and confirm the first detection of a gravitational wave. The Southern California Earthquake Center (SCEC) based at USC, uses a Pegasus managed workflow infrastructure called Cybershake to generate hazard maps for the Southern California region. In 2021, SCEC conducted a CyberShake study on DOE systems Summit that used a simulation-based ERF for the first time. Overall, the study required 65,470 node-hours (358,000 GPU-hours and 243,000 CPU-hours ) of computation with Pegasus submitting tens of thousands of remote jobs automatically, and managed 165 TB of data over the 29-day study. Pegasus is also being used in astronomy, bioinformatics, civil engineering, climate modeling, earthquake science, molecular dynamics and other complex analyses. Pegasus users express their workflows using an abstract representation devoid of resource- specific information. Pegasus plans these abstract workflows by mapping tasks to available resources, augmenting the workflow with data management tasks, and optimizing the workflow by grouping small tasks into more efficient clustered batch jobs. Pegasus then executes this plan. If an error occurs at runtime, Pegasus automatically retries the failed task and provides checkpointing in case the workflow cannot continue. Pegasus can record provenance about the data, software and hardware used. Pegasus has a foundation for managing workflows in different environments, using workflow engines that are customized for a particular workload and system. Pegasus has a well defined support for major container technologies such as Docker, Singulartiy, Shifter that allows users to have the jobs in their workflow use containers of their choice. Pegasus most recent major release Pegasus 5.0 is a major improvement over previous releases. Pegasus 5.0 provides a brand new Python3 workflow API developed from the ground up so that, in addition to generating the abstract workflow and all the catalogs, it now allows you to plan, submit, monitor, analyze and generate statistics of your workflow.

Bio:

Karan Vahi is a Senior Computer Scientist in the Science Automation Technologies group at the USC Information Sciences Institute. He has been working in the field of scientific workflows since 2002, and has been closely involved in the development of the Pegasus Workflow Management System. He is currently the architect/lead developer for Pegasus and in charge of the core development of Pegasus. His work on implementing integrity checking in Pegasus for scientific workflows won the best paper and the Phil Andrews Most Transformative Research Award at PEARC19. He currently leads the Cloud Platforms group at CI Compass, a NSF CI Center, which includes CI practitioners from various NFS Major facilities(MF’s) and aims to understand the current practices for Cloud Infrastructure used by MFs and research alternative solutions. https://www.isi.edu/directory/vahi/

Host of Seminar:

Hai Ah Nam, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Pegasus Workflow Management System

Speaker:

Karan Vahi, Information Sciences Institute, University of Southern California

Abstract:

Workflows are a key technology for enabling complex scientific computations. They capture the interdependencies between processing steps in data analysis and simulation pipelines as well as the mechanisms to execute those steps reliably and efficiently. Workflows can capture complex processes to promote sharing and reuse, and also provide provenance information necessary for the verification of scientific results and scientific reproducibility. Pegasus (https://pegasus.isi.edu) is being used in a number of scientific domains doing production grade science. In 2016 the LIGO gravitational wave experiment used Pegasus to analyze instrumental data and confirm the first detection of a gravitational wave. The Southern California Earthquake Center (SCEC) based at USC, uses a Pegasus managed workflow infrastructure called Cybershake to generate hazard maps for the Southern California region. In 2021, SCEC conducted a CyberShake study on DOE systems Summit that used a simulation-based ERF for the first time. Overall, the study required 65,470 node-hours (358,000 GPU-hours and 243,000 CPU-hours ) of computation with Pegasus submitting tens of thousands of remote jobs automatically, and managed 165 TB of data over the 29-day study. Pegasus is also being used in astronomy, bioinformatics, civil engineering, climate modeling, earthquake science, molecular dynamics and other complex analyses. Pegasus users express their workflows using an abstract representation devoid of resource- specific information. Pegasus plans these abstract workflows by mapping tasks to available resources, augmenting the workflow with data management tasks, and optimizing the workflow by grouping small tasks into more efficient clustered batch jobs. Pegasus then executes this plan. If an error occurs at runtime, Pegasus automatically retries the failed task and provides checkpointing in case the workflow cannot continue. Pegasus can record provenance about the data, software and hardware used. Pegasus has a foundation for managing workflows in different environments, using workflow engines that are customized for a particular workload and system. Pegasus has a well defined support for major container technologies such as Docker, Singulartiy, Shifter that allows users to have the jobs in their workflow use containers of their choice. Pegasus most recent major release Pegasus 5.0 is a major improvement over previous releases. Pegasus 5.0 provides a brand new Python3 workflow API developed from the ground up so that, in addition to generating the abstract workflow and all the catalogs, it now allows you to plan, submit, monitor, analyze and generate statistics of your workflow.

Bio:

Karan Vahi is a Senior Computer Scientist in the Science Automation Technologies group at the USC Information Sciences Institute. He has been working in the field of scientific workflows since 2002, and has been closely involved in the development of the Pegasus Workflow Management System. He is currently the architect/lead developer for Pegasus and in charge of the core development of Pegasus. His work on implementing integrity checking in Pegasus for scientific workflows won the best paper and the Phil Andrews Most Transformative Research Award at PEARC19. He currently leads the Cloud Platforms group at CI Compass, a NSF CI Center, which includes CI practitioners from various NFS Major facilities(MF’s) and aims to understand the current practices for Cloud Infrastructure used by MFs and research alternative solutions. https://www.isi.edu/directory/vahi/

Host of Seminar:

Hai Ah Nam, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 6 participants

- 58 minutes

9 Aug 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Transparent Checkpointing: a mature technology enabling MANA for MPI and beyond

Speaker:

Gene Cooperman, Khoury College of Computer Sciences, Northeastern University

Abstract:

Although transparent checking grew up in the 1990s and 2000s as a technology for HPC, it has now grown as a tool that is useful for many newer domains. Today, this is no longer your grandfather's checkpointing software! In this talk, I will review some of the newer checkpointing technologies invented only in the last decade, and how they gate new capabilities that can be adapted in a variety of domains.

This talk includes a tour of the 15-year old DMTCP project, with special emphasis on the latest achievement: MANA for MPI -- a robust package for transparent checkpointing of MPI. But as a prerequisite, one must have an understanding of two advances that brought DMTCP to its present state: (i) a general framework for extensible checkpointing plugins; and (ii) split processes (isolate the software application to be checkpointed from the underlying hardware).

In the remainder of the talk, these two principles are first showcased in MANA. This is then followed by a selection of other domains where transparent checkpointing shows interesting potential. This includes: deep learning (especially for general frameworks), edge computing, lambda functions (serverless computing), spot instances, containers for parallel and distributed computing (Apptainer and Singularity), process migration (migrate the process to the data in joint work with JPL), deep debugging for parallel and distributed computations, a model for checkpointing in Hadoop, and more.

Bio:

Professor Cooperman currently works in high-performance computing. He received his B.S. from the University of Michigan in 1974, and his Ph.D. from Brown University in 1978. He came to Northeastern University in 1986, and has been a full professor there since 1992. His visiting research positions include a 5-year IDEX Chair of Attractivity at the University of Toulouse/CNRS in France, and sabbaticals at Concordia University, at CERN, and in Inria/France. In 2014, he and his student, Xin Dong, used a novel idea to semi-automatically add multi-threading support to the million-line Geant4 code coordinated out of CERN. He is one of the more than 100 co-authors on the foundational Geant4 paper, whose current citation count is 34,000. Prof. Cooperman currently leads the DMTCP project (Distributed Multi-Threaded CheckPointing) for transparent checkpointing. The project began in 2004, and has benefited from a series of PhD theses. Over 150 refereed publications cite DMTCP as having contributed to their research project.

Host of Seminar:

Zhengji Zhao, User Engagement Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Transparent Checkpointing: a mature technology enabling MANA for MPI and beyond

Speaker:

Gene Cooperman, Khoury College of Computer Sciences, Northeastern University

Abstract:

Although transparent checking grew up in the 1990s and 2000s as a technology for HPC, it has now grown as a tool that is useful for many newer domains. Today, this is no longer your grandfather's checkpointing software! In this talk, I will review some of the newer checkpointing technologies invented only in the last decade, and how they gate new capabilities that can be adapted in a variety of domains.

This talk includes a tour of the 15-year old DMTCP project, with special emphasis on the latest achievement: MANA for MPI -- a robust package for transparent checkpointing of MPI. But as a prerequisite, one must have an understanding of two advances that brought DMTCP to its present state: (i) a general framework for extensible checkpointing plugins; and (ii) split processes (isolate the software application to be checkpointed from the underlying hardware).

In the remainder of the talk, these two principles are first showcased in MANA. This is then followed by a selection of other domains where transparent checkpointing shows interesting potential. This includes: deep learning (especially for general frameworks), edge computing, lambda functions (serverless computing), spot instances, containers for parallel and distributed computing (Apptainer and Singularity), process migration (migrate the process to the data in joint work with JPL), deep debugging for parallel and distributed computations, a model for checkpointing in Hadoop, and more.

Bio:

Professor Cooperman currently works in high-performance computing. He received his B.S. from the University of Michigan in 1974, and his Ph.D. from Brown University in 1978. He came to Northeastern University in 1986, and has been a full professor there since 1992. His visiting research positions include a 5-year IDEX Chair of Attractivity at the University of Toulouse/CNRS in France, and sabbaticals at Concordia University, at CERN, and in Inria/France. In 2014, he and his student, Xin Dong, used a novel idea to semi-automatically add multi-threading support to the million-line Geant4 code coordinated out of CERN. He is one of the more than 100 co-authors on the foundational Geant4 paper, whose current citation count is 34,000. Prof. Cooperman currently leads the DMTCP project (Distributed Multi-Threaded CheckPointing) for transparent checkpointing. The project began in 2004, and has benefited from a series of PhD theses. Over 150 refereed publications cite DMTCP as having contributed to their research project.

Host of Seminar:

Zhengji Zhao, User Engagement Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 3 participants

- 58 minutes

28 Jun 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

FourCastNet: Data-driven, high-resolution atmosphere modeling at scale

Speaker:

Shashank Subramanian, Data & Analytics Services Group, National Energy Research Scientific Computing Center (NERSC), Lawrence Berkeley National Laboratory

Abstract:

We present FourCastNet, short for Fourier Forecasting Neural Network, a global data-driven weather forecasting model that provides accurate short to medium-range global predictions at 25km resolution. FourCastNet accurately forecasts high-resolution, fast-timescale variables such as the surface wind speed, total precipitation, and atmospheric water vapor with important implications for wind energy resource planning, predicting extreme weather events such as tropical cyclones and atmospheric rivers, as well as extreme precipitation. We compare the forecast skill of FourCastNet with archived operational IFS model forecasts and find that the forecast skill of our purely data-driven model is remarkably close to that of the IFS model for short to medium-range forecasts. FourCastNet generates a week-long forecast in less than 2 seconds, orders of magnitude faster than IFS, enabling the creation of inexpensive large-ensemble forecasts for improved probabilistic forecasting. Finally, our implementation is optimized and we present efficient scaling results on different supercomputing systems up to 3808 NVIDIA A100 GPUs, resulting in 80000 times faster time-to-solution relative to IFS, in inference.

Bio:

Shashank Subramanian is a NESAP for learning postdoctoral fellow with research interests in the intersection of high-performance scientific computing, deep learning, and physical sciences.

Host of Seminar:

Peter Harrington, Data & Analytics Services Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

FourCastNet: Data-driven, high-resolution atmosphere modeling at scale

Speaker:

Shashank Subramanian, Data & Analytics Services Group, National Energy Research Scientific Computing Center (NERSC), Lawrence Berkeley National Laboratory

Abstract:

We present FourCastNet, short for Fourier Forecasting Neural Network, a global data-driven weather forecasting model that provides accurate short to medium-range global predictions at 25km resolution. FourCastNet accurately forecasts high-resolution, fast-timescale variables such as the surface wind speed, total precipitation, and atmospheric water vapor with important implications for wind energy resource planning, predicting extreme weather events such as tropical cyclones and atmospheric rivers, as well as extreme precipitation. We compare the forecast skill of FourCastNet with archived operational IFS model forecasts and find that the forecast skill of our purely data-driven model is remarkably close to that of the IFS model for short to medium-range forecasts. FourCastNet generates a week-long forecast in less than 2 seconds, orders of magnitude faster than IFS, enabling the creation of inexpensive large-ensemble forecasts for improved probabilistic forecasting. Finally, our implementation is optimized and we present efficient scaling results on different supercomputing systems up to 3808 NVIDIA A100 GPUs, resulting in 80000 times faster time-to-solution relative to IFS, in inference.

Bio:

Shashank Subramanian is a NESAP for learning postdoctoral fellow with research interests in the intersection of high-performance scientific computing, deep learning, and physical sciences.

Host of Seminar:

Peter Harrington, Data & Analytics Services Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 3 participants

- 59 minutes

23 Jun 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Demo and hands-on session on ReFrame

Speaker:

Lisa Gerhardt, Alberto Chiusole - NERSC, Berkeley Lab

Abstract:

Overview and brief demo of the capabilities of ReFrame, and how we use it at NERSC to run pipelines on different systems and continuously test the user-facing requirements.

Title:

Demo and hands-on session on ReFrame

Speaker:

Lisa Gerhardt, Alberto Chiusole - NERSC, Berkeley Lab

Abstract:

Overview and brief demo of the capabilities of ReFrame, and how we use it at NERSC to run pipelines on different systems and continuously test the user-facing requirements.

- 5 participants

- 41 minutes

14 Jun 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Artificial Design of Porous Materials

Speaker:

Jihan Kim, Department of Chemical and Biomolecular Engineering, KAIST

Abstract:

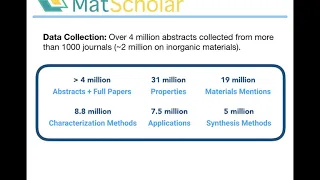

In this presentation, I will explore the new trend of designing novel porous materials using artificial design principles. I will talk about using our in-house developed generative adversarial network (GAN) software to create (for the first time) porous materials. Moreover, we have successfully implemented inverse-design in our GAN prompting ways to train our AI to create porous materials with user-desired methane adsorption capacity [1]. Next, we incorporate machine learning with genetic algorithm to design optimal metal-organic frameworks suitable for many different applications including methane storage and gas separations [2-3]. Finally, we demonstrate usage of text mining to collect wealth of data from published papers to predict optimal synthesis conditions for porous materials [4]. Overall, machine learning and artificial design can accelerate the materials discovery and expedite the process to deploy new materials for many different applications.

Bio:

Jihan Kim is an associate professor at KAIST (Korea Advanced Institute of Science and Technology). He received his B.S. degree in Electrical Engineering and Computer Science (EECS) at UC Berkeley in 1997 and received his M.S. and Ph.D. degrees in Electrical and Computer Engineering at University of Illinois at Urbana-Champaign in 2004 and 2009, respectively. He worked as a NERSC postdoc in the Petascale Post-doc project from 2009 to 2011 and worked as postdoctoral researcher in UC Berkeley/LBNL with Prof. Berend Smit from 2011 to 2013. His current research at KAIST focuses on using molecular simulations and machine learning methods to design novel porous materials (e.g. zeolites, MOFs, porous polymers) for various energy and environmental related applications (e.g. gas storage, gas separations, catalysis, sensors). He has published over 100 papers and has over 7000 Google Scholar citations.

Host of Seminar:

Brian Austin, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Artificial Design of Porous Materials

Speaker:

Jihan Kim, Department of Chemical and Biomolecular Engineering, KAIST

Abstract:

In this presentation, I will explore the new trend of designing novel porous materials using artificial design principles. I will talk about using our in-house developed generative adversarial network (GAN) software to create (for the first time) porous materials. Moreover, we have successfully implemented inverse-design in our GAN prompting ways to train our AI to create porous materials with user-desired methane adsorption capacity [1]. Next, we incorporate machine learning with genetic algorithm to design optimal metal-organic frameworks suitable for many different applications including methane storage and gas separations [2-3]. Finally, we demonstrate usage of text mining to collect wealth of data from published papers to predict optimal synthesis conditions for porous materials [4]. Overall, machine learning and artificial design can accelerate the materials discovery and expedite the process to deploy new materials for many different applications.

Bio:

Jihan Kim is an associate professor at KAIST (Korea Advanced Institute of Science and Technology). He received his B.S. degree in Electrical Engineering and Computer Science (EECS) at UC Berkeley in 1997 and received his M.S. and Ph.D. degrees in Electrical and Computer Engineering at University of Illinois at Urbana-Champaign in 2004 and 2009, respectively. He worked as a NERSC postdoc in the Petascale Post-doc project from 2009 to 2011 and worked as postdoctoral researcher in UC Berkeley/LBNL with Prof. Berend Smit from 2011 to 2013. His current research at KAIST focuses on using molecular simulations and machine learning methods to design novel porous materials (e.g. zeolites, MOFs, porous polymers) for various energy and environmental related applications (e.g. gas storage, gas separations, catalysis, sensors). He has published over 100 papers and has over 7000 Google Scholar citations.

Host of Seminar:

Brian Austin, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 2 participants

- 49 minutes

7 Jun 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Building a Platform for Operating Multi-Institutional Distributed Services

Speaker:

Lincoln Bryant, Research Engineer at the University of Chicago's Enrico Fermi Institute

Abstract:

Much of science today is propelled by research collaborations that require highly interconnected instrumentation, computational, and storage resources that cross institutional boundaries. To provide a generalized service infrastructure for multi-institutional science, we propose a new abstraction and implementation of this model: Federated Operations (FedOps) and SLATE. We will show the general principles behind the FedOps trust model and how the SLATE platform implements FedOps for building a service fabric over independently operated Kubernetes clusters. Finally, we will show how SLATE is being used to manage data and software caching networks in production across computing sites in the US ATLAS computing facility in support the ATLAS experiment at the CERN Large Hadron Collider.

Bio:

Lincoln Bryant is a Research Engineer in the Enrico Fermi Institute at the University of Chicago. He has over a decade of experience building and supporting High-Throughput Computing (HTC), distributed storage, and containerization/virtualization systems for both the ATLAS experiment at the Large Hadron Collider and other collaborations as part of the Open Science Grid Consortium. Lincoln is one of the primary contributors to the Services Layer At The Edge (SLATE) project and has been an active Kubernetes user since 2017.

Host of Seminar:

Jonathan Skone, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Building a Platform for Operating Multi-Institutional Distributed Services

Speaker:

Lincoln Bryant, Research Engineer at the University of Chicago's Enrico Fermi Institute

Abstract:

Much of science today is propelled by research collaborations that require highly interconnected instrumentation, computational, and storage resources that cross institutional boundaries. To provide a generalized service infrastructure for multi-institutional science, we propose a new abstraction and implementation of this model: Federated Operations (FedOps) and SLATE. We will show the general principles behind the FedOps trust model and how the SLATE platform implements FedOps for building a service fabric over independently operated Kubernetes clusters. Finally, we will show how SLATE is being used to manage data and software caching networks in production across computing sites in the US ATLAS computing facility in support the ATLAS experiment at the CERN Large Hadron Collider.

Bio:

Lincoln Bryant is a Research Engineer in the Enrico Fermi Institute at the University of Chicago. He has over a decade of experience building and supporting High-Throughput Computing (HTC), distributed storage, and containerization/virtualization systems for both the ATLAS experiment at the Large Hadron Collider and other collaborations as part of the Open Science Grid Consortium. Lincoln is one of the primary contributors to the Services Layer At The Edge (SLATE) project and has been an active Kubernetes user since 2017.

Host of Seminar:

Jonathan Skone, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 6 participants

- 53 minutes

17 May 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Memory Disaggregation: Potentials and Pitfalls

Speaker:

Nan Ding, Performance and Algorithms Group, Computer Science Department, Lawrence Berkeley National Laboratory

Abstract:

Memory usage imbalance has been consistently observed in many data centers. This has sparked interest in memory disaggregation, which allows applications to use all available memory across an entire data center instead of being confined to the memory of a single server. In the talk, I'll present the design space and implementation for building a disaggregated memory system. I'll then discuss the critical metrics for applications to benefit from memory disaggregation.

Bio:

Nan Ding is a Research Scientist in the Performance and Algorithms group of the Computer Science Department at Lawrence Berkeley National Laboratory. Her research interests include high-performance computing, performance modeling, and auto-tuning. Nan received her Ph.D. in computer science from Tsinghua University, Beijing, China in 2018.

Host of Seminar:

Hai Ah Nam, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Memory Disaggregation: Potentials and Pitfalls

Speaker:

Nan Ding, Performance and Algorithms Group, Computer Science Department, Lawrence Berkeley National Laboratory

Abstract:

Memory usage imbalance has been consistently observed in many data centers. This has sparked interest in memory disaggregation, which allows applications to use all available memory across an entire data center instead of being confined to the memory of a single server. In the talk, I'll present the design space and implementation for building a disaggregated memory system. I'll then discuss the critical metrics for applications to benefit from memory disaggregation.

Bio:

Nan Ding is a Research Scientist in the Performance and Algorithms group of the Computer Science Department at Lawrence Berkeley National Laboratory. Her research interests include high-performance computing, performance modeling, and auto-tuning. Nan received her Ph.D. in computer science from Tsinghua University, Beijing, China in 2018.

Host of Seminar:

Hai Ah Nam, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 2 participants

- 39 minutes

26 Apr 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

FirecREST, RESTful HPC

Speaker:

Juan Pablo Dorsch, HPC Software Engineer & Lead for the Innovative Resource Access Methods, CSCS Swiss National Supercomputing Center

Abstract:

FirecREST is a RESTful API to HPC that empowers scientific communities to access compute and data HPC services and infrastructure through a web interface. This API supports and enhances the development of scientific portals that allow web developers and HPC users to adapt their workflows in a more flexible, secure, automated, and standardized way. In this talk, we will present FirecREST and provide an introduction to its capabilities.

Bio:

Juan Pablo Dorsch is a software engineer and lead for the Innovative Resource Access Methods at the CSCS Swiss National Supercomputing Centre. His areas of expertise include microservice architecture design, IAM, web development and RESTful services. Before joining CSCS, Juan held the position of HPC engineer with the Computational Methods Research Centre (CIMEC), and the position of scientific software developer with the International Centre for Numerical Methods in Engineering (CIMNE). He was also previously a degree professor at the National University (UNL) of Littoral in Santa Fe, Argentina. He holds a degree in Informatics Engineering with an emphasis on scientific applications from UNL.

Host of Seminar:

Jonathan Skone, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

FirecREST, RESTful HPC

Speaker:

Juan Pablo Dorsch, HPC Software Engineer & Lead for the Innovative Resource Access Methods, CSCS Swiss National Supercomputing Center

Abstract:

FirecREST is a RESTful API to HPC that empowers scientific communities to access compute and data HPC services and infrastructure through a web interface. This API supports and enhances the development of scientific portals that allow web developers and HPC users to adapt their workflows in a more flexible, secure, automated, and standardized way. In this talk, we will present FirecREST and provide an introduction to its capabilities.

Bio:

Juan Pablo Dorsch is a software engineer and lead for the Innovative Resource Access Methods at the CSCS Swiss National Supercomputing Centre. His areas of expertise include microservice architecture design, IAM, web development and RESTful services. Before joining CSCS, Juan held the position of HPC engineer with the Computational Methods Research Centre (CIMEC), and the position of scientific software developer with the International Centre for Numerical Methods in Engineering (CIMNE). He was also previously a degree professor at the National University (UNL) of Littoral in Santa Fe, Argentina. He holds a degree in Informatics Engineering with an emphasis on scientific applications from UNL.

Host of Seminar:

Jonathan Skone, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 2 participants

- 48 minutes

19 Apr 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Discovering and Modeling Strong Gravitational Lenses with Cori and Perlmutter at NERSC

Speakers:

Xiaosheng Huang, USF

Andi Gu, UCB

Abstract:

We have discovered over 1500 new strong lens candidates in the Dark Energy Spectroscopic Instrument (DESI) Legacy Imaging Surveys with residual neural networks using NERSC resources. Follow-up observations are underway. Our Hubble Space Telescope program has confirmed all 51 observed candides. DESI observations have confirmed more systems spectroscopically. Preliminary results from our latest search will increase the number of lens candidates to over 3000. We have also developed GIGA-Lens: a gradient-informed, GPU-accelerated Bayesian framework, implemented in TensorFlow and JAX. All components of this framework (optimization, variational inference, HMC) take advantage of gradient information through autodiff and parallelization on GPUs. Running on one Perlmutter A100 GPU node, we achieve 1-2 orders of magnitude speedup compared to existing codes. The robustness, speed, and scalability offered by this framework make it possible to model the large number of strong lenses found in DESI, and O(10^5) lenses expected to be discovered in upcoming large-scale surveys, such as the LSST.

Bios:

Xiaosheng Huang received his PhD from UC Berkeley and has been a faculty member in the Physics & Astronomy Department at the University of San Francisco since 2012. He works on problems in observational cosmology with collaborators in the Supernova Cosmology Project, the Nearby Supernova Factory, and the Dark Energy Spectroscopic Instrument experiment, and of course, with students.

Andi Gu is a current senior at UC Berkeley. He has been working in the Supernova Cosmology Project and DESI since 2019, applying his computer science and physics background to gravitational lens detection and modeling.

Host of Seminar:

Steven Farrell

Data & Analytics Services Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Discovering and Modeling Strong Gravitational Lenses with Cori and Perlmutter at NERSC

Speakers:

Xiaosheng Huang, USF

Andi Gu, UCB

Abstract:

We have discovered over 1500 new strong lens candidates in the Dark Energy Spectroscopic Instrument (DESI) Legacy Imaging Surveys with residual neural networks using NERSC resources. Follow-up observations are underway. Our Hubble Space Telescope program has confirmed all 51 observed candides. DESI observations have confirmed more systems spectroscopically. Preliminary results from our latest search will increase the number of lens candidates to over 3000. We have also developed GIGA-Lens: a gradient-informed, GPU-accelerated Bayesian framework, implemented in TensorFlow and JAX. All components of this framework (optimization, variational inference, HMC) take advantage of gradient information through autodiff and parallelization on GPUs. Running on one Perlmutter A100 GPU node, we achieve 1-2 orders of magnitude speedup compared to existing codes. The robustness, speed, and scalability offered by this framework make it possible to model the large number of strong lenses found in DESI, and O(10^5) lenses expected to be discovered in upcoming large-scale surveys, such as the LSST.

Bios:

Xiaosheng Huang received his PhD from UC Berkeley and has been a faculty member in the Physics & Astronomy Department at the University of San Francisco since 2012. He works on problems in observational cosmology with collaborators in the Supernova Cosmology Project, the Nearby Supernova Factory, and the Dark Energy Spectroscopic Instrument experiment, and of course, with students.

Andi Gu is a current senior at UC Berkeley. He has been working in the Supernova Cosmology Project and DESI since 2019, applying his computer science and physics background to gravitational lens detection and modeling.

Host of Seminar:

Steven Farrell

Data & Analytics Services Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 4 participants

- 53 minutes

22 Mar 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Composable Platforms for Scientific Computing: Experiences and Outcomes

Speakers:

Erik Gough, Brian Werts, Sam Weekly - Rosen Center for Advanced Computing, Purdue University

Abstract:

The Geddes Composable Platform is an on-premise Kubernetes-based private cloud hosted at Purdue University that’s designed to meet the increased demand for scientific data analysis and to promote "SciOps" — the application of DevOps principles in scientific computing. The platform has supported research groups and data science initiatives at Purdue, enabling as many as sixty users from a variety of scientific domains. In this seminar, we will give a technical overview of the platform and its components, summarize the usage patterns, and describe the scientific use cases the platform enables. Some examples of services deployed through Geddes include JupyterHubs, science gateways, databases, ML-based image classifiers, and web-based BLAST database searches. The same technology behind Geddes is found in Purdue’s new XSEDE resource named Anvil, which provides composable computing capabilities to the broader national research community.

Bios:

Erik Gough is a lead computational scientist in the Research Computing department at Purdue University. He has been building, maintaining and using large scale cyberinfrastructure for scientific computing at Purdue since 2007. Gough is a technical leader on multiple NSF funded projects, including an NSF CC* award to build the Geddes Composable Platform.

Brian Werts is the lead engineer for the design and implementation of Purdue’s Geddes Composable Platform and a HIPAA aligned Hadoop cluster for researchers that leverages Kubernetes to help facilitate reproducibility and scalability of data science workflows.

Host of Seminar:

Jonathan Skone, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Composable Platforms for Scientific Computing: Experiences and Outcomes

Speakers:

Erik Gough, Brian Werts, Sam Weekly - Rosen Center for Advanced Computing, Purdue University

Abstract:

The Geddes Composable Platform is an on-premise Kubernetes-based private cloud hosted at Purdue University that’s designed to meet the increased demand for scientific data analysis and to promote "SciOps" — the application of DevOps principles in scientific computing. The platform has supported research groups and data science initiatives at Purdue, enabling as many as sixty users from a variety of scientific domains. In this seminar, we will give a technical overview of the platform and its components, summarize the usage patterns, and describe the scientific use cases the platform enables. Some examples of services deployed through Geddes include JupyterHubs, science gateways, databases, ML-based image classifiers, and web-based BLAST database searches. The same technology behind Geddes is found in Purdue’s new XSEDE resource named Anvil, which provides composable computing capabilities to the broader national research community.

Bios:

Erik Gough is a lead computational scientist in the Research Computing department at Purdue University. He has been building, maintaining and using large scale cyberinfrastructure for scientific computing at Purdue since 2007. Gough is a technical leader on multiple NSF funded projects, including an NSF CC* award to build the Geddes Composable Platform.

Brian Werts is the lead engineer for the design and implementation of Purdue’s Geddes Composable Platform and a HIPAA aligned Hadoop cluster for researchers that leverages Kubernetes to help facilitate reproducibility and scalability of data science workflows.

Host of Seminar:

Jonathan Skone, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 4 participants

- 45 minutes

15 Mar 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Chameleon: An Innovation Platform for Computer Science Research and Education

Speakers:

Kate Keahey, Senior Scientist, CASE Affiliate

Abstract:

We live in interesting times: new ideas and technological opportunities emerge at ever increasing rate in disaggregated hardware, programmable networks, and the edge computing and IoT space to name just a few. These innovations require an instrument where they can be deployed and investigated, and where new solutions that those disruptive ideas require can be developed, tested, and shared. To support a breadth of Computer Science experiments such instrument has to provide access to a diversity of hardware configurations, support deployment at scale, as well as deep reconfigrability so that a wide range of experiments can be supported. It also has to provide mechanisms for easy and direct sharing of repeatable digital artifacts so that new experiments and results can be easily replicated and help enable further innovation. Most importantly -- since science does not stand still – such instrument requires the capability for constant adaptation to support an ever increasing range of experiments driven by emergent ideas and opportunities. The NSF-funded Chameleon testbed (www.chameleoncloud.org) has been developed to provide all those capabilities. It provides access to a variety of hardware including cutting-edge architectures, a range of accelerators, storage hierarchies with a mix of large RAM, NVDIMMs, a variety of enterprise and consumer grade SDDs, HDDs, high-bandwidth I/0 storage, SDN-enabled networking hardware, and fast interconnects. This diversity was enlarged recently to add support for edge computing/IoT devices and will be further extended this year to include LiQid composable hardware as well as P4 switches. Chameleon is distributed over two core sites at the University of Chicago and the Texas Advanced Computing Center (TACC) connected by 100 Gbps network – as well as three volunteer sites at NCAR, Northwestern University, and the University of Illinois in Chicago (UIC). Bare metal reconfigurability for Computer Science experiments is provided by CHameleon Infrastructure (CHI), based on an enhanced bare-metal flavor of OpenStack: it allows users to reconfigure resources at bare metal level, boot from custom kernel, and have root privileges on the machines. To date, the testbed has supported 6,000+ users and 800+ projects in research, education, and emergent applications. In this talk, I will describe the goals, the design strategy, and the capabilities of the testbed, as well as some of the research and education projects our users are working on. I will also discuss our new thrusts in support for research on edge computing and IoT, our investment in developing and packaging of research infrastructure (CHI-in-a-Box), as well as our support for composable systems that can both dynamically integrate resources from other sources into Chameleon and make Chameleon resources available via other systems. Lastly, I will describe the services and tools we created to support sharing of experiments, educational curricula, and other digitally expressed artifacts that allow science to be shared via active involvement and foster reproducibility.

Bio:

Kate Keahey is one of the pioneers of infrastructure cloud computing. She created the Nimbus project, recognized as the first open source Infrastructure-as-a-Service implementation, and continues to work on research aligning cloud computing concepts with the needs of scientific datacenters and applications. To facilitate such research for the community at large, Kate leads the Chameleon project, providing a deeply reconfigurable, large-scale, and open experimental platform for Computer Science research. To foster the recognition of contributions to science made by software projects, Kate co-founded and serves as co-Editor-in-Chief of the SoftwareX journal, a new format designed to publish software contributions. Kate is a Scientist at Argonne National Laboratory and a Senior Scientist The University of Chicago Consortium for Advanced Science and Engineering (UChicago CASE).

Hosts of Seminar:

Shane Canon, Data & Analytics Group

Jonathan Skone, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Chameleon: An Innovation Platform for Computer Science Research and Education

Speakers:

Kate Keahey, Senior Scientist, CASE Affiliate

Abstract:

We live in interesting times: new ideas and technological opportunities emerge at ever increasing rate in disaggregated hardware, programmable networks, and the edge computing and IoT space to name just a few. These innovations require an instrument where they can be deployed and investigated, and where new solutions that those disruptive ideas require can be developed, tested, and shared. To support a breadth of Computer Science experiments such instrument has to provide access to a diversity of hardware configurations, support deployment at scale, as well as deep reconfigrability so that a wide range of experiments can be supported. It also has to provide mechanisms for easy and direct sharing of repeatable digital artifacts so that new experiments and results can be easily replicated and help enable further innovation. Most importantly -- since science does not stand still – such instrument requires the capability for constant adaptation to support an ever increasing range of experiments driven by emergent ideas and opportunities. The NSF-funded Chameleon testbed (www.chameleoncloud.org) has been developed to provide all those capabilities. It provides access to a variety of hardware including cutting-edge architectures, a range of accelerators, storage hierarchies with a mix of large RAM, NVDIMMs, a variety of enterprise and consumer grade SDDs, HDDs, high-bandwidth I/0 storage, SDN-enabled networking hardware, and fast interconnects. This diversity was enlarged recently to add support for edge computing/IoT devices and will be further extended this year to include LiQid composable hardware as well as P4 switches. Chameleon is distributed over two core sites at the University of Chicago and the Texas Advanced Computing Center (TACC) connected by 100 Gbps network – as well as three volunteer sites at NCAR, Northwestern University, and the University of Illinois in Chicago (UIC). Bare metal reconfigurability for Computer Science experiments is provided by CHameleon Infrastructure (CHI), based on an enhanced bare-metal flavor of OpenStack: it allows users to reconfigure resources at bare metal level, boot from custom kernel, and have root privileges on the machines. To date, the testbed has supported 6,000+ users and 800+ projects in research, education, and emergent applications. In this talk, I will describe the goals, the design strategy, and the capabilities of the testbed, as well as some of the research and education projects our users are working on. I will also discuss our new thrusts in support for research on edge computing and IoT, our investment in developing and packaging of research infrastructure (CHI-in-a-Box), as well as our support for composable systems that can both dynamically integrate resources from other sources into Chameleon and make Chameleon resources available via other systems. Lastly, I will describe the services and tools we created to support sharing of experiments, educational curricula, and other digitally expressed artifacts that allow science to be shared via active involvement and foster reproducibility.

Bio:

Kate Keahey is one of the pioneers of infrastructure cloud computing. She created the Nimbus project, recognized as the first open source Infrastructure-as-a-Service implementation, and continues to work on research aligning cloud computing concepts with the needs of scientific datacenters and applications. To facilitate such research for the community at large, Kate leads the Chameleon project, providing a deeply reconfigurable, large-scale, and open experimental platform for Computer Science research. To foster the recognition of contributions to science made by software projects, Kate co-founded and serves as co-Editor-in-Chief of the SoftwareX journal, a new format designed to publish software contributions. Kate is a Scientist at Argonne National Laboratory and a Senior Scientist The University of Chicago Consortium for Advanced Science and Engineering (UChicago CASE).

Hosts of Seminar:

Shane Canon, Data & Analytics Group

Jonathan Skone, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 2 participants

- 57 minutes

1 Feb 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

KubeFlux: a scheduler plugin bridging the cloud-HPC gap in Kubernetes

Speakers:

Claudia Misale, Research Staff Member in Hybrid Cloud Infrastructure Software Dept, IBM Research

Daniel J. Milroy, Computer Scientist, Center for Applied Scientific Computing, Lawrence Livermore National Laboratory

Abstract:

The cloud is an increasingly important market sector of computing and is driving innovation. Adoption of cloud technologies by high performance computing (HPC) is accelerating, and HPC users want their applications to perform well everywhere. While cloud orchestration frameworks like Kubernetes provide advantages like resiliency, elasticity, and automation, they are not designed to enable application performance to the same degree as HPC workload managers and schedulers. As HPC and cloud Computing converge, techniques from HPC can be integrated into the cloud to improve application performance and provide universal scalability. We present KubeFlux, a Kubernetes plugin based on the Fluxion open-source HPC scheduler component of the Flux framework developed at the Lawrence Livermore National Laboratory. We introduce the Flux framework and the Fluxion scheduler and describe how their hierarchical, graph-based foundation is naturally suited to converged computing. We discuss uses for KubeFlux and compare the performance of an application scheduled by the Kubernetes default scheduler and KubeFlux. KubeFlux is an example of the rich capability that can be added to Kubernetes and paves the way to democratization of the cloud for HPC workloads.

Bio(s):

Claudia Misale is a Research Staff Member in the Hybrid Cloud Infrastructure Software group at IBM T.J. Watson Research Center (NY). Her research is focused on Kubernetes for IBM Public Cloud, and also targets porting HPC applications to the cloud by enabling batch scheduling alternatives for Kubernetes. She is mainly interested in cloud computing and container technologies, and her background is on high-level parallel programming models and patterns, and big data analytics on HPC platforms. She received her master’s summa cum laude and bachelor’s degree in Computer Science at the University of Calabria (Italy), and her PhD from the Computer Science Department of the University of Torino (Italy).

Daniel Milroy is a Computer Scientist at the Center for Applied Scientific Computing at the Lawrence Livermore National Laboratory. His research focuses on graph-based scheduling and resource representation and management for high performance computing (HPC) and cloud converged environments. While Dan’s research background is numerical analysis and software quality assurance and correctness for climate simulations, he is currently interested in scheduling and representing dynamic resources, and co-scheduling and management techniques for HPC and cloud. Dan holds a B.A. in physics from the University of Chicago, and an M.S. and PhD in computer science from the University of Colorado Boulder.

Host of Seminar:

Shane Canon

Data & Analytics Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

KubeFlux: a scheduler plugin bridging the cloud-HPC gap in Kubernetes

Speakers:

Claudia Misale, Research Staff Member in Hybrid Cloud Infrastructure Software Dept, IBM Research

Daniel J. Milroy, Computer Scientist, Center for Applied Scientific Computing, Lawrence Livermore National Laboratory

Abstract:

The cloud is an increasingly important market sector of computing and is driving innovation. Adoption of cloud technologies by high performance computing (HPC) is accelerating, and HPC users want their applications to perform well everywhere. While cloud orchestration frameworks like Kubernetes provide advantages like resiliency, elasticity, and automation, they are not designed to enable application performance to the same degree as HPC workload managers and schedulers. As HPC and cloud Computing converge, techniques from HPC can be integrated into the cloud to improve application performance and provide universal scalability. We present KubeFlux, a Kubernetes plugin based on the Fluxion open-source HPC scheduler component of the Flux framework developed at the Lawrence Livermore National Laboratory. We introduce the Flux framework and the Fluxion scheduler and describe how their hierarchical, graph-based foundation is naturally suited to converged computing. We discuss uses for KubeFlux and compare the performance of an application scheduled by the Kubernetes default scheduler and KubeFlux. KubeFlux is an example of the rich capability that can be added to Kubernetes and paves the way to democratization of the cloud for HPC workloads.

Bio(s):

Claudia Misale is a Research Staff Member in the Hybrid Cloud Infrastructure Software group at IBM T.J. Watson Research Center (NY). Her research is focused on Kubernetes for IBM Public Cloud, and also targets porting HPC applications to the cloud by enabling batch scheduling alternatives for Kubernetes. She is mainly interested in cloud computing and container technologies, and her background is on high-level parallel programming models and patterns, and big data analytics on HPC platforms. She received her master’s summa cum laude and bachelor’s degree in Computer Science at the University of Calabria (Italy), and her PhD from the Computer Science Department of the University of Torino (Italy).

Daniel Milroy is a Computer Scientist at the Center for Applied Scientific Computing at the Lawrence Livermore National Laboratory. His research focuses on graph-based scheduling and resource representation and management for high performance computing (HPC) and cloud converged environments. While Dan’s research background is numerical analysis and software quality assurance and correctness for climate simulations, he is currently interested in scheduling and representing dynamic resources, and co-scheduling and management techniques for HPC and cloud. Dan holds a B.A. in physics from the University of Chicago, and an M.S. and PhD in computer science from the University of Colorado Boulder.

Host of Seminar:

Shane Canon

Data & Analytics Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 3 participants

- 47 minutes

13 Jan 2022

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

The Next Generation of High Performance Computing: HPC-2.0

Speaker:

Gregory Kurtzer, CEO of Ctrl IQ, Inc. and Executive Director of Rocky Enterprise Software Foundation / Rocky Linux

Abstract:

We’ve been using the same base architecture for building HPC systems for almost 30 years and while the capabilities of our systems have increased considerably, we still use the same flat and monolithic architecture of the 1990’s to build our systems. What would the next generation architecture look like? How do we leverage containers to do computing of complex workflows while orchestrating not only jobs, but data? How do we bridge HPC into the 2020’s and make optimal use of multi-clusters and federate these systems into a larger resource to unite on-prem, multi-prem, cloud, and multi-cloud? How do we integrate with these resources in a cloud-native compatible manner supporting CI/CD, DevOps, DevSecOps, compute portals, GUIs, and even mobile? This isn’t a bunch of shoelace and duct-tape on top of legacy HPC, this is an entirely new way to think about HPC infrastructure. This is a glimpse into HPC-2.0, coming later in Q1 of 2022.

Bio:

Gregory M. Kurtzer is a 20+ year veteran in Linux, open source, and high performance computing. He is well known in the HPC space for designing scalable and easy to manage secure architectures for innovative performance intensive computing while working for the U.S. Department of Energy and joint appointment to UC Berkeley. Greg founded and led several large open source projects such as CentOS Linux, the Warewulf and Perceus cluster toolkits, the container system Singularity, and most recently, the successor to CentOS, Rocky Linux. Greg’s first startup was acquired almost 2 years ago and now he is working on software infrastructure, including Rocky Linux as well as building a cloud native, cloud hybrid, federated orchestration platform called Fuzzball.

Hosts of Seminar:

Jonathan Skone, Advanced Technologies Group

Glenn Lockwood, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

The Next Generation of High Performance Computing: HPC-2.0

Speaker:

Gregory Kurtzer, CEO of Ctrl IQ, Inc. and Executive Director of Rocky Enterprise Software Foundation / Rocky Linux

Abstract:

We’ve been using the same base architecture for building HPC systems for almost 30 years and while the capabilities of our systems have increased considerably, we still use the same flat and monolithic architecture of the 1990’s to build our systems. What would the next generation architecture look like? How do we leverage containers to do computing of complex workflows while orchestrating not only jobs, but data? How do we bridge HPC into the 2020’s and make optimal use of multi-clusters and federate these systems into a larger resource to unite on-prem, multi-prem, cloud, and multi-cloud? How do we integrate with these resources in a cloud-native compatible manner supporting CI/CD, DevOps, DevSecOps, compute portals, GUIs, and even mobile? This isn’t a bunch of shoelace and duct-tape on top of legacy HPC, this is an entirely new way to think about HPC infrastructure. This is a glimpse into HPC-2.0, coming later in Q1 of 2022.

Bio:

Gregory M. Kurtzer is a 20+ year veteran in Linux, open source, and high performance computing. He is well known in the HPC space for designing scalable and easy to manage secure architectures for innovative performance intensive computing while working for the U.S. Department of Energy and joint appointment to UC Berkeley. Greg founded and led several large open source projects such as CentOS Linux, the Warewulf and Perceus cluster toolkits, the container system Singularity, and most recently, the successor to CentOS, Rocky Linux. Greg’s first startup was acquired almost 2 years ago and now he is working on software infrastructure, including Rocky Linux as well as building a cloud native, cloud hybrid, federated orchestration platform called Fuzzball.

Hosts of Seminar:

Jonathan Skone, Advanced Technologies Group

Glenn Lockwood, Advanced Technologies Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 2 participants

- 38 minutes

14 Dec 2021

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Characterizing Machine Learning I/O Workloads on Leadership Scale HPC Systems

Speaker:

Ahmad Maroof Karimi

A.I. Methods at Scale Group

National Center for Computational Sciences (NCCS) Division

Oak Ridge National Laboratory

Abstract:

High performance computing (HPC) is no longer solely limited to traditional workloads such as simulation and modeling. With the increase in the popularity of machine learn- ing (ML) and deep learning (DL) technologies, we are observing that an increasing number of HPC users

are incorporating ML methods into their workflow and scientific discovery processes, across a wide spectrum of science domains such as biology, earth science, and physics. This gives rise to a diverse set of I/O patterns than the traditional checkpoint/restart-based HPC I/O behavior. The details of the I/O characteristics of such ML I/O workloads have not been studied extensively for large-scale leadership HPC systems. This paper aims to fill that gap by providing an in-depth analysis to gain an understanding of the I/O behavior of ML I/O workloads using darshan - an I/O characterization tool designed for lightweight tracing and profiling. We study the darshan logs of more than 23,000 HPC ML I/O jobs over a time period of one year running on Summit - the second-fastest supercomputer in the world. This paper provides a systematic I/O characterization of ML I/O jobs running on a leadership scale supercomputer to understand how the I/O behavior differs across science domains and the scale of workloads, and analyze the usage of parallel file system and burst buffer by ML I/O workloads.

Bio:

Ahmad Maroof Karimi works as an HPC Operational Data Scientist in Analytics and A.I. Methods at Scale (AAIMS) Group in National Center for Computational Sciences (NCCS) Division, Oak Ridge National Laboratory. His current research focuses on the characterization of HPC I/O patterns and finding evolving HPC workload trends. He is also working on analyzing HPC facility data to characterize the HPC power consumption and building machine learning based job-aware power prediction models. Before joining ORNL, Ahmad completed his Ph.D. in Computer Science at CWRU, Cleveland, Ohio, in October 2020. His Ph.D. dissertation titled “Data science and machine learning to predict degradation and power of photovoltaic systems: convolutional and spatiotemporal graph neural networks” focused on classifying degradation mechanism and performance prediction of a photovoltaic power plant. He received his M.S. degree from the University of Toledo, Ohio, and B.S. degree from Aligarh Muslim University, India. Ahmad has also worked in the I.T. industry as a software programmer and database designer.

Hosts of Seminar:

Hai Ah Nam, Advanced Technologies Group

Wahid Bhimji, Acting Group Leader, Data & Analytics Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Characterizing Machine Learning I/O Workloads on Leadership Scale HPC Systems

Speaker:

Ahmad Maroof Karimi

A.I. Methods at Scale Group

National Center for Computational Sciences (NCCS) Division

Oak Ridge National Laboratory

Abstract:

High performance computing (HPC) is no longer solely limited to traditional workloads such as simulation and modeling. With the increase in the popularity of machine learn- ing (ML) and deep learning (DL) technologies, we are observing that an increasing number of HPC users

are incorporating ML methods into their workflow and scientific discovery processes, across a wide spectrum of science domains such as biology, earth science, and physics. This gives rise to a diverse set of I/O patterns than the traditional checkpoint/restart-based HPC I/O behavior. The details of the I/O characteristics of such ML I/O workloads have not been studied extensively for large-scale leadership HPC systems. This paper aims to fill that gap by providing an in-depth analysis to gain an understanding of the I/O behavior of ML I/O workloads using darshan - an I/O characterization tool designed for lightweight tracing and profiling. We study the darshan logs of more than 23,000 HPC ML I/O jobs over a time period of one year running on Summit - the second-fastest supercomputer in the world. This paper provides a systematic I/O characterization of ML I/O jobs running on a leadership scale supercomputer to understand how the I/O behavior differs across science domains and the scale of workloads, and analyze the usage of parallel file system and burst buffer by ML I/O workloads.

Bio:

Ahmad Maroof Karimi works as an HPC Operational Data Scientist in Analytics and A.I. Methods at Scale (AAIMS) Group in National Center for Computational Sciences (NCCS) Division, Oak Ridge National Laboratory. His current research focuses on the characterization of HPC I/O patterns and finding evolving HPC workload trends. He is also working on analyzing HPC facility data to characterize the HPC power consumption and building machine learning based job-aware power prediction models. Before joining ORNL, Ahmad completed his Ph.D. in Computer Science at CWRU, Cleveland, Ohio, in October 2020. His Ph.D. dissertation titled “Data science and machine learning to predict degradation and power of photovoltaic systems: convolutional and spatiotemporal graph neural networks” focused on classifying degradation mechanism and performance prediction of a photovoltaic power plant. He received his M.S. degree from the University of Toledo, Ohio, and B.S. degree from Aligarh Muslim University, India. Ahmad has also worked in the I.T. industry as a software programmer and database designer.

Hosts of Seminar:

Hai Ah Nam, Advanced Technologies Group

Wahid Bhimji, Acting Group Leader, Data & Analytics Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 3 participants

- 42 minutes

7 Dec 2021

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Ceph Storage at CERN

Speaker(s):

Dan van der Ster, Pablo Llopis Sanmillan

CERN

Abstract:

Ceph and its Reliable Autonomic Distributed Object Store (RADOS) offers a scale out storage solution for block storage (RBD), object storage (S3 and SWIFT) and filesystems (CephFS). The key technologies enabling Ceph include CRUSH, a mechanism for defining and implementing failure domains, and the mature Object Storage Daemons (OSDs), which provide a reliable storage backend via replication or erasure coding. CERN has employed Ceph for its on-prem cloud infrastructures since 2013. As of 2021, its storage group operates more than ten clusters totaling over 50 petabytes for cloud, Kubernetes, and HPC use-cases. This talk will introduce Ceph and its key concepts, and describe how CERN uses Ceph in practice. It will include recent highlights related to high-throughput particle physics data taking and SLURM storage optimization.

Bio(s):

Dan manages the Ceph storage at CERN in Geneva, Switzerland. He has participated actively in its community since 2013 and was one of the first to demonstrate its scalability up to multi-10s of petabytes. Dan is a regular speaker at Open Infrastructure events, previously acted as Academic Liaison to the original Ceph Advisory Board, and has a similar role in the current Ceph Board. Dan earned a PhD in Distributed Systems at the University of Victoria, Canada in 2008.

Pablo is a computer engineer at CERN, where he manages the IT department’s HPC service. He provides HPC support to both engineers of the Accelerator Technology Sector and to theoretical physicists. Pablo works on improving the performance of their HPC workloads, and on other projects such as the automation of operational tasks of the infrastructure. He holds a Ph.D. in computer science from University Carlos III of Madrid. In the past he has also collaborated with Argonne National Laboratory and IBM Research Zurich on HPC and cloud-related topics. His main areas of interest include high performance computing, storage systems, power efficiency, and distributed systems.Zoom:

Host of Seminar:

Alberto Chiusole

Storage and I/O Software Engineer, Data and Analytics Services

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Ceph Storage at CERN

Speaker(s):

Dan van der Ster, Pablo Llopis Sanmillan

CERN

Abstract:

Ceph and its Reliable Autonomic Distributed Object Store (RADOS) offers a scale out storage solution for block storage (RBD), object storage (S3 and SWIFT) and filesystems (CephFS). The key technologies enabling Ceph include CRUSH, a mechanism for defining and implementing failure domains, and the mature Object Storage Daemons (OSDs), which provide a reliable storage backend via replication or erasure coding. CERN has employed Ceph for its on-prem cloud infrastructures since 2013. As of 2021, its storage group operates more than ten clusters totaling over 50 petabytes for cloud, Kubernetes, and HPC use-cases. This talk will introduce Ceph and its key concepts, and describe how CERN uses Ceph in practice. It will include recent highlights related to high-throughput particle physics data taking and SLURM storage optimization.

Bio(s):

Dan manages the Ceph storage at CERN in Geneva, Switzerland. He has participated actively in its community since 2013 and was one of the first to demonstrate its scalability up to multi-10s of petabytes. Dan is a regular speaker at Open Infrastructure events, previously acted as Academic Liaison to the original Ceph Advisory Board, and has a similar role in the current Ceph Board. Dan earned a PhD in Distributed Systems at the University of Victoria, Canada in 2008.

Pablo is a computer engineer at CERN, where he manages the IT department’s HPC service. He provides HPC support to both engineers of the Accelerator Technology Sector and to theoretical physicists. Pablo works on improving the performance of their HPC workloads, and on other projects such as the automation of operational tasks of the infrastructure. He holds a Ph.D. in computer science from University Carlos III of Madrid. In the past he has also collaborated with Argonne National Laboratory and IBM Research Zurich on HPC and cloud-related topics. His main areas of interest include high performance computing, storage systems, power efficiency, and distributed systems.Zoom:

Host of Seminar:

Alberto Chiusole

Storage and I/O Software Engineer, Data and Analytics Services

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 7 participants

- 1:06 hours

6 Dec 2021

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

funcX: Federated FaaS for Scientific Computing

Speaker:

Ryan Chard

Data Science and Learning Division

Argonne National Laboratory

Abstract:

Exploding data volumes and velocities, new computational methods and platforms, and ubiquitous connectivity demand new approaches to computation in the sciences. These new approaches must enable computation to be mobile, so that, for example, it can occur near data, be triggered by events (e.g., arrival of new data), be offloaded to specialized accelerators, or run remotely where resources are available. They also require new design approaches in which monolithic applications can be decomposed into smaller components, that may in turn be executed separately and on the most suitable resources. To address these needs we present funcX—a distributed function as a service (FaaS) platform that enables flexible, scalable, and high-performance remote function execution. funcX's endpoint software can transform existing clusters and supercomputers into function serving systems, while funcX's cloud-hosted service provides transparent, secure, and reliable function execution across a federated ecosystem of endpoints. We demonstrate the use of funcX with several scientific case studies and show how it integrates into the wider Globus ecosystem to enable secure, fire-and-forget scientific computation.

Bio:

Ryan Chard joined Argonne in 2016 as a Maria Goeppert Mayer Fellow and then as an Assistant Computer Scientist in the Data Science and Learning Division. He now works with Argonne, UChicago, and Globus to develop cyberinfrastructure to enable scientific research. In particular, he works on the Globus Flows platform to create reliable data analysis pipelines and the funcX service to enable function serving for HPC. He has a Ph.D. in computer science and an M.Sc. from Victoria University of Wellington, New Zealand.

Host of Seminar:

Jonathan Skone, Advanced Technologies

Bjoern Enders, Data Science Engagement Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

funcX: Federated FaaS for Scientific Computing

Speaker:

Ryan Chard

Data Science and Learning Division

Argonne National Laboratory

Abstract:

Exploding data volumes and velocities, new computational methods and platforms, and ubiquitous connectivity demand new approaches to computation in the sciences. These new approaches must enable computation to be mobile, so that, for example, it can occur near data, be triggered by events (e.g., arrival of new data), be offloaded to specialized accelerators, or run remotely where resources are available. They also require new design approaches in which monolithic applications can be decomposed into smaller components, that may in turn be executed separately and on the most suitable resources. To address these needs we present funcX—a distributed function as a service (FaaS) platform that enables flexible, scalable, and high-performance remote function execution. funcX's endpoint software can transform existing clusters and supercomputers into function serving systems, while funcX's cloud-hosted service provides transparent, secure, and reliable function execution across a federated ecosystem of endpoints. We demonstrate the use of funcX with several scientific case studies and show how it integrates into the wider Globus ecosystem to enable secure, fire-and-forget scientific computation.

Bio:

Ryan Chard joined Argonne in 2016 as a Maria Goeppert Mayer Fellow and then as an Assistant Computer Scientist in the Data Science and Learning Division. He now works with Argonne, UChicago, and Globus to develop cyberinfrastructure to enable scientific research. In particular, he works on the Globus Flows platform to create reliable data analysis pipelines and the funcX service to enable function serving for HPC. He has a Ph.D. in computer science and an M.Sc. from Victoria University of Wellington, New Zealand.

Host of Seminar:

Jonathan Skone, Advanced Technologies

Bjoern Enders, Data Science Engagement Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

- 8 participants

- 1:07 hours

30 Nov 2021

NERSC Data Seminars Series: https://github.com/NERSC/data-seminars

Title:

Tiled: A Service for Structured Data Access

Speaker:

Dan Allan, NSLS-II - Brookhaven National Laboratory

Abstract:

In the Data Science and Systems Integration Program at NSLS-II, we have explored various ways to separate I/O code from user science code. After seven years of developing in-house solutions and contributing to external ones (including Intake), we propose an abstraction that we think is a broadly useful building block, named Tiled. Tiled is a data access service for data-aware portals and data science tools. It has a Python client that feels much like h5py to use and integrates naturally with dask, but nothing about the service is Python-specific; it also works from curl. Tiled’s service sits atop databases, filesystems, and/or remote services to enable search and structured, chunk-wise access to data in an extensible variety of appropriate formats, providing data in a consistent structure regardless of the format the data happens to be stored in at rest. The natively-supported formats span slow but widespread interchange formats (e.g. CSV, JSON) and fast, efficient ones (e.g. C buffers, Apache Arrow Tables). Tiled enables slicing and sub-selection to read and transfer only the data of interest, and it enables parallelized download of many chunks at once. Users can access data with very light software dependencies and fast partial downloads. Tiled puts an emphasis on structures rather than formats, including N-dimensional strided arrays (i.e. numpy-like arrays), tabular data (i.e. pandas-like“dataframes”), and hierarchical structures thereof (e.g. xarrays, HDF5-compatible structures like NeXus). Tiled implements extensible access control enforcement based on web security standards, similar -to JupyterHub Authenticators. Like Jupyter, Tiled can be used by a single user or deployed as a shared resource. Tiled facilitates local client-side caching in a standard web browser or in Tiled’s Python client, making efficient use of bandwidth and enabling an offline “airplane mode.” Service-side caching of "hot" datasets and resources is also possible. Tiled is conceptually “complete” but still new enough that there is room for disruptive suggestions and feedback. We are interested in particular in exploring how Tiled could be made broadly available to NERSC users alongside traditional file-based access, and how that work might prompt us to rethink aspects of Tiled’s design.

Bio:

Dan Allan is scientific software developer and group lead in the Data Science and Systems Integration Program at NSLS-II. He joined Brookhaven National Lab as a post-doc in 2015 after studying soft condensed-matter experimental physics and getting involved in the open source scientific Python community. He works on data acquisition, management, and analysis within and around the "Bluesky" software ecosystem.

Host of Seminar:

Bjoern Enders

Data Science Engagement Group

National Energy Research Scientific Computing Center (NERSC)

Lawrence Berkeley National Laboratory

Title:

Tiled: A Service for Structured Data Access

Speaker: